polyreg module¶

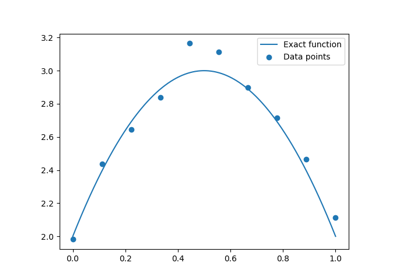

Polynomial regression model.

Polynomial regression is a particular case of the linear regression, where the input data is transformed before the regression is applied. This transform consists of creating a matrix of monomials by raising the input data to different powers up to a certain degree \(D\). In the case where there is only one input variable, the input data \((x_i)_{i=1, \dots, n}\in\mathbb{R}^n\) is transformed into the Vandermonde matrix:

The output variable is expressed as a weighted sum of monomials:

where the coefficients \(w_1, w_2, ..., w_d\) and the intercept \(w_0\) are estimated by least square regression.

In the case of a multidimensional input, i.e. \(X = (x_{ij})_{i=1,\dots,n; j=1,\dots,m}\), where \(n\) is the number of samples and \(m\) is the number of input variables, the Vandermonde matrix is expressed through different combinations of monomials of degree \(d, (1 \leq d \leq D)\); e.g. for three variables \((x, y, z)\) and degree \(D=3\), the different terms are \(x\), \(y\), \(z\), \(x^2\), \(xy\), \(xz\), \(y^2\), \(yz\), \(z^2\), \(x^3\), \(x^2y\) etc. More generally, for \(m\) input variables, the total number of monomials of degree \(1 \leq d \leq D\) is given by \(P = \binom{m+D}{m} = \frac{(m+D)!}{m!D!}\). In the case of 3 input variables given above, the total number of monomial combinations of degree lesser than or equal to three is thus \(P = \binom{6}{3} = 20\). The linear regression has to identify the coefficients \(w_1, \dots, w_P\), in addition to the intercept \(w_0\).

Dependence¶

The polynomial regression model relies on the LinearRegression and PolynomialFeatures classes of the scikit-learn library.

- class gemseo.mlearning.regression.polyreg.PolynomialRegressor(data, degree, transformer=mappingproxy({}), input_names=None, output_names=None, fit_intercept=True, penalty_level=0.0, l2_penalty_ratio=1.0, **parameters)[source]

Bases:

LinearRegressorPolynomial regression model.

- Parameters:

data (IODataset) – The learning dataset.

degree (int) – The polynomial degree.

transformer (TransformerType) –

The strategies to transform the variables. The values are instances of

Transformerwhile the keys are the names of either the variables or the groups of variables, e.g."inputs"or"outputs"in the case of the regression algorithms. If a group is specified, theTransformerwill be applied to all the variables of this group. IfIDENTITY, do not transform the variables.By default it is set to {}.

input_names (Iterable[str] | None) – The names of the input variables. If

None, consider all the input variables of the learning dataset.output_names (Iterable[str] | None) – The names of the output variables. If

None, consider all the output variables of the learning dataset.fit_intercept (bool) –

Whether to fit the intercept.

By default it is set to True.

penalty_level (float) –

The penalty level greater or equal to 0. If 0, there is no penalty.

By default it is set to 0.0.

l2_penalty_ratio (float) –

The penalty ratio related to the l2 regularization. If 1, the penalty is the Ridge penalty. If 0, this is the Lasso penalty. Between 0 and 1, the penalty is the ElasticNet penalty.

By default it is set to 1.0.

**parameters (float | int | str | bool | None) – The parameters of the machine learning algorithm.

- Raises:

ValueError – If the degree is lower than one.

- get_coefficients(as_dict=False)[source]

Return the regression coefficients of the linear model.

- Parameters:

as_dict (bool) –

If

True, return the coefficients as a dictionary of Numpy arrays indexed by the names of the coefficients. Otherwise, return the coefficients as a Numpy array. For now the only valid value is False.By default it is set to False.

- Returns:

The regression coefficients of the linear model.

- Raises:

NotImplementedError – If the coefficients are required as a dictionary.

- Return type:

- load_algo(directory)[source]

Load a machine learning algorithm from a directory.

- Parameters:

directory (str | Path) – The path to the directory where the machine learning algorithm is saved.

- Return type:

None

- SHORT_ALGO_NAME: ClassVar[str] = 'PolyReg'

The short name of the machine learning algorithm, often an acronym.

Typically used for composite names, e.g.

f"{algo.SHORT_ALGO_NAME}_{dataset.name}"orf"{algo.SHORT_ALGO_NAME}_{discipline.name}".

- algo: Any

The interfaced machine learning algorithm.

- learning_set: Dataset

The learning dataset.

- transformer: dict[str, Transformer]

The strategies to transform the variables, if any.

The values are instances of

Transformerwhile the keys are the names of either the variables or the groups of variables, e.g. “inputs” or “outputs” in the case of the regression algorithms. If a group is specified, theTransformerwill be applied to all the variables of this group.