
Parameter space

In this example, we will see the basics of ParameterSpace.

from matplotlib import pyplot as plt

from gemseo.algos.parameter_space import ParameterSpace
from gemseo.api import configure_logger, create_discipline, create_scenario

configure_logger()

Out:

<RootLogger root (INFO)>

Create a parameter space

Firstly, the creation of a ParameterSpace does not require any mandatory argument:

parameter_space = ParameterSpace()

Then, we can add either deterministic variables from their lower and upper bounds (use ParameterSpace.add_variable()) or uncertain variables from their distribution names and parameters (use ParameterSpace.add_random_variable()):

parameter_space.add_variable("x", l_b=-2.0, u_b=2.0)
parameter_space.add_random_variable("y", "SPNormalDistribution", mu=0.0, sigma=1.0)
print(parameter_space)

Out:

+----------------------------------------------------------------------------+
|                              Parameter space                               |
+------+-------------+-------+-------------+-------+-------------------------+
| name | lower_bound | value | upper_bound | type  |   Initial distribution  |
+------+-------------+-------+-------------+-------+-------------------------+
| x    |      -2     |  None |      2      | float |                         |
| y    |     -inf    |   0   |     inf     | float | norm(mu=0.0, sigma=1.0) |
+------+-------------+-------+-------------+-------+-------------------------+
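The printed table can be read as one small record per variable. The sketch below is a hypothetical stand-in, not GEMSEO's ParameterSpace: the names ToyVariable, toy_add_variable and toy_add_normal_variable are invented for illustration, and it assumes, as the table suggests, that a deterministic variable only stores its bounds (no current value yet) while a normal variable gets an unbounded support and its mean as current value.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToyVariable:
    """One row of the table above (hypothetical record, not a GEMSEO class)."""

    name: str
    lower_bound: float
    upper_bound: float
    value: Optional[float]
    distribution: str = ""


def toy_add_variable(name: str, l_b: float, u_b: float) -> ToyVariable:
    # Deterministic variable: only the bounds are known, no current value is set.
    return ToyVariable(name, l_b, u_b, None)


def toy_add_normal_variable(name: str, mu: float, sigma: float) -> ToyVariable:
    # Normal variable: unbounded support, mean used as current value (assumption).
    return ToyVariable(
        name, -math.inf, math.inf, mu, f"norm(mu={mu}, sigma={sigma})"
    )


table = [toy_add_variable("x", -2.0, 2.0), toy_add_normal_variable("y", 0.0, 1.0)]
for row in table:
    print(row.name, row.lower_bound, row.value, row.upper_bound, row.distribution)
```

This mirrors why y is shown with bounds -inf and inf and value 0: a normal distribution has no finite bounds, and 0 is its mean.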

We can check that the deterministic and uncertain variables are implemented as deterministic and uncertain variables respectively:

print("x is deterministic: ", parameter_space.is_deterministic("x"))
print("y is deterministic: ", parameter_space.is_deterministic("y"))
print("x is uncertain: ", parameter_space.is_uncertain("x"))
print("y is uncertain: ", parameter_space.is_uncertain("y"))

Out:

x is deterministic:  True
y is deterministic:  False
x is uncertain:  False
y is uncertain:  True
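These four checks are consistent with a simple rule: a variable is uncertain when a probability distribution is attached to it, and deterministic otherwise. A hypothetical sketch of that rule, using a plain dictionary instead of GEMSEO's internals (the registry and both functions are illustrative, not the library's code):

```python
# Hypothetical registry: variable name -> attached distribution (None if deterministic).
distributions = {"x": None, "y": "norm(mu=0.0, sigma=1.0)"}


def is_uncertain(name: str) -> bool:
    # A variable is uncertain exactly when a probability distribution is attached to it.
    return distributions[name] is not None


def is_deterministic(name: str) -> bool:
    # The two categories are mutually exclusive, so one is the negation of the other.
    return not is_uncertain(name)


for name in ("x", "y"):
    print(f"{name} is deterministic: {is_deterministic(name)}")
    print(f"{name} is uncertain: {is_uncertain(name)}")
```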

Sample from the parameter space

We can sample the uncertain variables from the ParameterSpace and get the values either as a NumPy array (by default) or, with as_dict=True, as a list of dictionaries, one per sample, mapping the variable names to NumPy arrays:

sample = parameter_space.compute_samples(n_samples=2, as_dict=True)
print(sample)
sample = parameter_space.compute_samples(n_samples=4)
print(sample)

Out:

[{'y': array([0.24590712])}, {'y': array([-0.1142415])}]
[[-0.01742018]
 [-1.00092256]
 [ 1.94369262]
 [-0.777003  ]]
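Only y appears in these samples because x is deterministic. The sketch below illustrates the two output layouts with Python's random module standing in for GEMSEO's samplers and nested lists standing in for NumPy arrays; the function compute_samples_sketch and its seed parameter are hypothetical, and every variable is assumed to be scalar.

```python
import random
from typing import Dict, List, Union

# Hypothetical uncertain variables: name -> (mu, sigma) of a normal distribution.
UNCERTAIN = {"y": (0.0, 1.0)}


def compute_samples_sketch(
    n_samples: int, as_dict: bool = False, seed: int = 1
) -> Union[List[List[float]], List[Dict[str, List[float]]]]:
    """Draw samples of the uncertain variables only (hypothetical helper)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_samples):
        # One row per sample, one column per (scalar) uncertain variable.
        rows.append([rng.gauss(mu, sigma) for mu, sigma in UNCERTAIN.values()])
    if not as_dict:
        # Array-like layout: shape (n_samples, n_uncertain_variables).
        return rows
    # Dictionary layout: one dict per sample, keyed by variable name.
    names = list(UNCERTAIN)
    return [{name: [value] for name, value in zip(names, row)} for row in rows]


print(compute_samples_sketch(2, as_dict=True))
print(compute_samples_sketch(4))
```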

Sample a discipline over the parameter space

We can also sample a discipline over the parameter space. For simplicity, we instantiate an AnalyticDiscipline from a dictionary of expressions:

discipline = create_discipline("AnalyticDiscipline", expressions_dict={"z": "x+y"})
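To make the idea of a discipline defined by expressions concrete, here is a toy equivalent that parses each expression once and evaluates it on input values. It swaps in Python's ast module and supports only basic arithmetic; this is not how GEMSEO parses expressions, and ToyAnalyticDiscipline is a hypothetical name.

```python
import ast
import operator

# Binary operators supported by this toy evaluator (hypothetical subset).
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _eval(node: ast.AST, inputs: dict) -> float:
    # Recursively evaluate a parsed arithmetic expression on the given inputs.
    if isinstance(node, ast.Expression):
        return _eval(node.body, inputs)
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left, inputs), _eval(node.right, inputs))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_eval(node.operand, inputs)
    if isinstance(node, ast.Name):
        return inputs[node.id]
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    raise ValueError(f"Unsupported expression element: {ast.dump(node)}")


class ToyAnalyticDiscipline:
    """Maps output names to arithmetic expressions of the inputs (hypothetical)."""

    def __init__(self, expressions: dict):
        # Parse every expression once, at construction time.
        self.trees = {out: ast.parse(expr, mode="eval") for out, expr in expressions.items()}

    def execute(self, inputs: dict) -> dict:
        return {out: _eval(tree, inputs) for out, tree in self.trees.items()}


discipline = ToyAnalyticDiscipline({"z": "x+y"})
print(discipline.execute({"x": 1.5, "y": -0.5}))  # {'z': 1.0}
```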

From this parameter space and this discipline, we build a DOEScenario and execute it with a Latin Hypercube Sampling (LHS) algorithm and 100 samples.

Warning

A DOEScenario considers all the variables available in its DesignSpace. Since ParameterSpace inherits from DesignSpace, a DOEScenario built on a ParameterSpace considers all the variables available in this ParameterSpace. Thus, if we do not filter the uncertain variables, the DOEScenario will consider both the deterministic variables, as uniformly distributed variables, and the uncertain variables, with their specified probability distributions.
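The behavior described in this warning can be illustrated with a hand-written Latin hypercube. This is not GEMSEO's "lhs" implementation; it assumes the common approach of drawing a design in the unit square and mapping each coordinate through the inverse cumulative distribution function of its variable: uniform over [-2, 2] for the deterministic x, N(0, 1) for the uncertain y. The function latin_hypercube is a hypothetical helper.

```python
import random
from statistics import NormalDist


def latin_hypercube(n_samples: int, n_dims: int, rng: random.Random) -> list:
    """Basic Latin hypercube design in the unit hypercube [0, 1)^n_dims."""
    columns = []
    for _ in range(n_dims):
        # Split [0, 1) into n_samples equal strata and draw one point per stratum,
        column = [(i + rng.random()) / n_samples for i in range(n_samples)]
        # then shuffle so that strata are paired randomly across dimensions.
        rng.shuffle(column)
        columns.append(column)
    # Transpose the columns into one row (point) per sample.
    return [list(row) for row in zip(*columns)]


rng = random.Random(0)
unit_samples = latin_hypercube(100, 2, rng)

normal_y = NormalDist(mu=0.0, sigma=1.0)
samples = []
for u_x, u_y in unit_samples:
    x = -2.0 + 4.0 * u_x  # deterministic x: uniform over its bounds [-2, 2]
    y = normal_y.inv_cdf(u_y)  # uncertain y: inverse CDF of N(0, 1)
    samples.append((x, y))

print(len(samples), min(x for x, _ in samples), max(x for x, _ in samples))
```

Each of the 100 strata of each coordinate receives exactly one point, which is what distinguishes LHS from plain Monte Carlo sampling.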

scenario = create_scenario(
    [discipline], "DisciplinaryOpt", "z", parameter_space, scenario_type="DOE"
)
scenario.execute({"algo": "lhs", "n_samples": 100})

Out:

INFO - 14:41:39:
INFO - 14:41:39: *** Start DOE Scenario execution ***
INFO - 14:41:39: DOEScenario
INFO - 14:41:39: Disciplines: AnalyticDiscipline
INFO - 14:41:39: MDOFormulation: DisciplinaryOpt
INFO - 14:41:39: Algorithm: lhs
INFO - 14:41:39: Optimization problem:
INFO - 14:41:39: Minimize: z(x, y)
INFO - 14:41:39: With respect to: x, y
INFO - 14:41:39: DOE sampling:   0%|          | 0/100 [00:00<?, ?it]
INFO - 14:41:39: DOE sampling: 100%|██████████| 100/100 [00:00<00:00, 2232.53 it/sec, obj=-1.22]
INFO - 14:41:39: Optimization result:
INFO - 14:41:39: Objective value = -1.8744085267228487
INFO - 14:41:39: The result is feasible.
INFO - 14:41:39: Status: None
INFO - 14:41:39: Optimizer message: None
INFO - 14:41:39: Number of calls to the objective function by the optimizer: 100
INFO - 14:41:39: +------------------------------------------------------------------------------------------+
INFO - 14:41:39: |                                     Parameter space                                      |
INFO - 14:41:39: +------+-------------+---------------------+-------------+-------+-------------------------+
INFO - 14:41:39: | name | lower_bound |        value        | upper_bound | type  |   Initial distribution  |
INFO - 14:41:39: +------+-------------+---------------------+-------------+-------+-------------------------+
INFO - 14:41:39: | x    |      -2     | -1.959995425007306  |      2      | float |                         |
INFO - 14:41:39: | y    |     -inf    | 0.08558689828445752 |     inf     | float | norm(mu=0.0, sigma=1.0) |
INFO - 14:41:39: +------+-------------+---------------------+-------------+-------+-------------------------+
INFO - 14:41:39: *** DOE Scenario run terminated ***
{'eval_jac': False, 'algo': 'lhs', 'n_samples': 100}
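In this log the "Optimization result" is simply the best evaluated point: x + y = -1.959995425007306 + 0.08558689828445752 gives the reported objective value -1.8744085267228487, and the 100 calls match the 100 samples. A hypothetical sketch of that bookkeeping, with toy_z standing in for the discipline and randomly drawn stand-in samples:

```python
import random


def toy_z(x: float, y: float) -> float:
    # Hypothetical stand-in for the AnalyticDiscipline z = x + y.
    return x + y


rng = random.Random(42)
# Hypothetical samples: x uniform over [-2, 2], y drawn from N(0, 1).
samples = [(rng.uniform(-2.0, 2.0), rng.gauss(0.0, 1.0)) for _ in range(100)]

# A DOE evaluates the objective once per sample; no optimizer iterations are involved.
history = [(x, y, toy_z(x, y)) for x, y in samples]

# The reported result is the evaluated point with the smallest objective value.
best_x, best_y, best_z = min(history, key=lambda row: row[2])
print(f"Number of calls to the objective function: {len(history)}")
print(f"Objective value = {best_z}")
print(f"x = {best_x}, y = {best_y}")
```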

We can export the optimization problem to a Dataset:

dataset = scenario.formulation.opt_problem.export_to_dataset(name="samples")

and visualize it in a tabular way:

print(dataset.export_to_dataframe())

Out:

   design_parameters  functions
           x         y         z
           0         0         0
0   1.869403  0.893701  2.763103
1  -1.567970  0.999490 -0.568480
2   0.282640  0.459495  0.742135
3   1.916313  0.967722  2.884034
4   1.562653  0.721075  2.283728
..       ...       ...       ...
95  0.120633  0.371654  0.492286
96 -0.999225  0.928048 -0.071178
97 -1.396066  0.165332 -1.230734
98  1.090093  0.589230  1.679323
99 -1.433207  0.217893 -1.215314

[100 rows x 3 columns]
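The three header rows of this frame read as group name, variable name and component index (0 for these scalar variables). The sketch below models that layout in plain Python, with (group, variable, component) tuple keys standing in for a pandas column MultiIndex; the values are the first five rows printed above, and the layout interpretation is an assumption based on the printed frame.

```python
# Values copied from the first rows printed above; keys are (group, variable, component).
table = {
    ("design_parameters", "x", 0): [1.869403, -1.567970, 0.282640, 1.916313, 1.562653],
    ("design_parameters", "y", 0): [0.893701, 0.999490, 0.459495, 0.967722, 0.721075],
    ("functions", "z", 0): [2.763103, -0.568480, 0.742135, 2.884034, 2.283728],
}

# Select a whole group, as the first header row does.
inputs = {key: values for key, values in table.items() if key[0] == "design_parameters"}
print(sorted(name for _, name, _ in inputs))

# Consistency check of z = x + y (printed values are rounded to 6 decimals).
x = table[("design_parameters", "x", 0)]
y = table[("design_parameters", "y", 0)]
z = table[("functions", "z", 0)]
print(all(abs(xi + yi - zi) < 1e-5 for xi, yi, zi in zip(x, y, z)))
```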

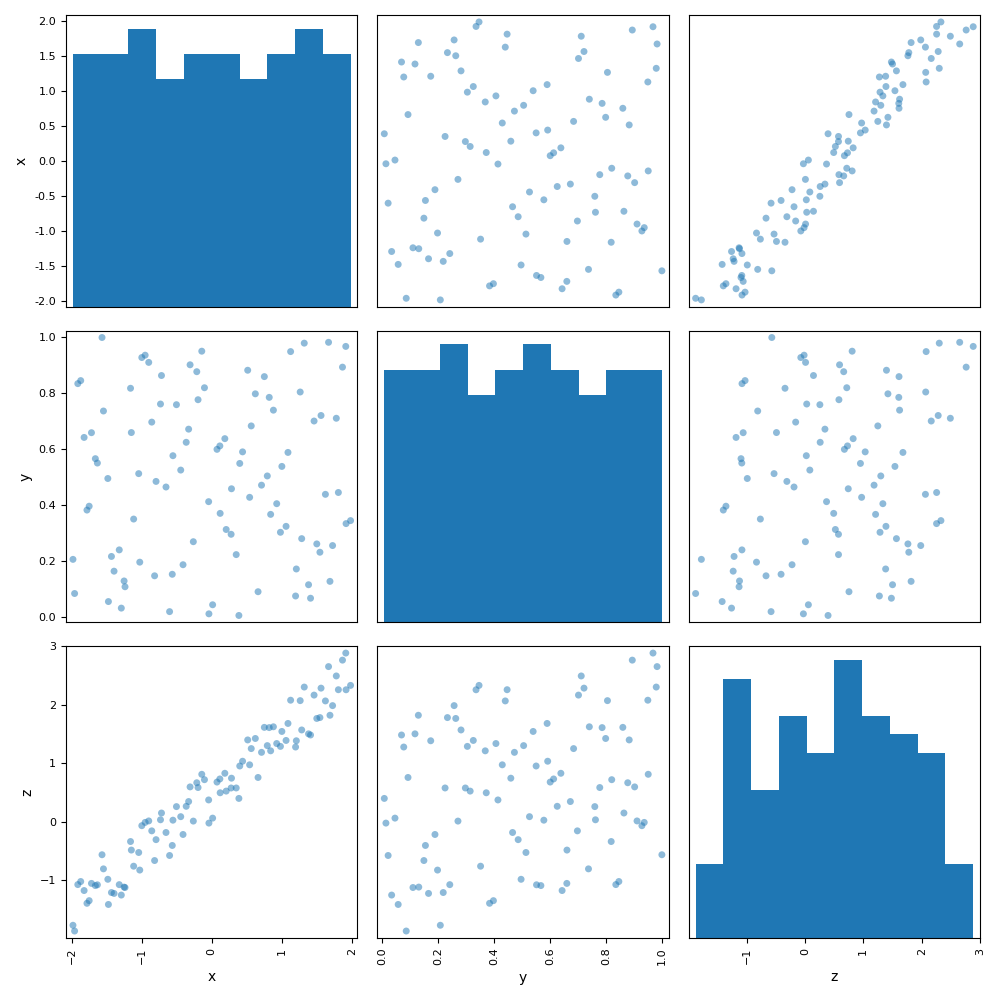

or with a graphical post-processing, e.g. a scatter plot matrix:

dataset.plot("ScatterMatrix", show=False)

# Workaround for HTML rendering, instead of ``show=True``

plt.show()

Sample a discipline over the uncertain space

If we want to sample a discipline over the uncertain space, we need to extract it:

uncertain_space = parameter_space.extract_uncertain_space()
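Conceptually, extracting the uncertain space amounts to keeping only the variables that carry a probability distribution. A hypothetical dictionary-based sketch (toy_space and extract_uncertain are illustrative names, not GEMSEO's API); it returns a new dictionary and leaves the full space untouched:

```python
# Hypothetical parameter space: name -> bounds and distribution (None if deterministic).
toy_space = {
    "x": {"l_b": -2.0, "u_b": 2.0, "distribution": None},
    "y": {"l_b": float("-inf"), "u_b": float("inf"), "distribution": "norm(mu=0.0, sigma=1.0)"},
}


def extract_uncertain(space: dict) -> dict:
    # Keep only the variables that carry a probability distribution.
    return {name: spec for name, spec in space.items() if spec["distribution"] is not None}


uncertain_toy_space = extract_uncertain(toy_space)
print(list(uncertain_toy_space))
```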

Then, we clear the cache, create a new scenario from this uncertain space, which contains only the uncertain variables, and execute it:

discipline.cache.clear()
scenario = create_scenario(
    [discipline], "DisciplinaryOpt", "z", uncertain_space, scenario_type="DOE"
)
scenario.execute({"algo": "lhs", "n_samples": 100})

Out:

INFO - 14:41:40:
INFO - 14:41:40: *** Start DOE Scenario execution ***
INFO - 14:41:40: DOEScenario
INFO - 14:41:40: Disciplines: AnalyticDiscipline
INFO - 14:41:40: MDOFormulation: DisciplinaryOpt
INFO - 14:41:40: Algorithm: lhs
INFO - 14:41:40: Optimization problem:
INFO - 14:41:40: Minimize: z(y)
INFO - 14:41:40: With respect to: y
INFO - 14:41:40: DOE sampling:   0%|          | 0/100 [00:00<?, ?it]
INFO - 14:41:40: DOE sampling: 100%|██████████| 100/100 [00:00<00:00, 2320.53 it/sec, obj=0.208]
INFO - 14:41:40: Optimization result:
INFO - 14:41:40: Objective value = 0.00417022004702574
INFO - 14:41:40: The result is feasible.
INFO - 14:41:40: Status: None
INFO - 14:41:40: Optimizer message: None
INFO - 14:41:40: Number of calls to the objective function by the optimizer: 100
INFO - 14:41:40: +------------------------------------------------------------------------------------------+
INFO - 14:41:40: |                                     Parameter space                                      |
INFO - 14:41:40: +------+-------------+---------------------+-------------+-------+-------------------------+
INFO - 14:41:40: | name | lower_bound |        value        | upper_bound | type  |   Initial distribution  |
INFO - 14:41:40: +------+-------------+---------------------+-------------+-------+-------------------------+
INFO - 14:41:40: | y    |     -inf    | 0.00417022004702574 |     inf     | float | norm(mu=0.0, sigma=1.0) |
INFO - 14:41:40: +------+-------------+---------------------+-------------+-------+-------------------------+
INFO - 14:41:40: *** DOE Scenario run terminated ***
{'eval_jac': False, 'algo': 'lhs', 'n_samples': 100}

Finally, we build a dataset from the optimization problem and visualize it. We can see that the deterministic variable ‘x’ is no longer sampled: it keeps its default value for all evaluations (here 0, since z equals y in every row), contrary to the previous case where we were considering the whole parameter space:

dataset = scenario.formulation.opt_problem.export_to_dataset(name="samples")
print(dataset.export_to_dataframe())

Out:

    design_parameters  functions
                    y          z
                    0          0
0            0.260850   0.260850
1            0.346919   0.346919
2            0.709034   0.709034
3            0.827509   0.827509
4            0.017203   0.017203
..                ...        ...
95           0.532655   0.532655
96           0.138781   0.138781
97           0.223134   0.223134
98           0.482878   0.482878
99           0.208007   0.208007

[100 rows x 2 columns]
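The identical y and z columns are what z = x + y produces when x stays at 0. A minimal sketch of that fallback, assuming (hypothetically) that inputs not provided by the scenario are taken from the discipline's default inputs; DEFAULT_INPUTS and toy_execute are illustrative names, and the default x = 0 is inferred from the table rather than stated by the source:

```python
# Hypothetical default inputs of z = x + y; x = 0 is inferred from z == y in the table.
DEFAULT_INPUTS = {"x": 0.0, "y": 0.0}


def toy_execute(inputs: dict) -> dict:
    # Inputs not provided by the scenario fall back to the discipline defaults.
    data = {**DEFAULT_INPUTS, **inputs}
    return {"z": data["x"] + data["y"]}


# The scenario over the uncertain space only provides y (first values of the table).
for y in (0.260850, 0.346919, 0.709034):
    print(y, toy_execute({"y": y})["z"])
```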
