
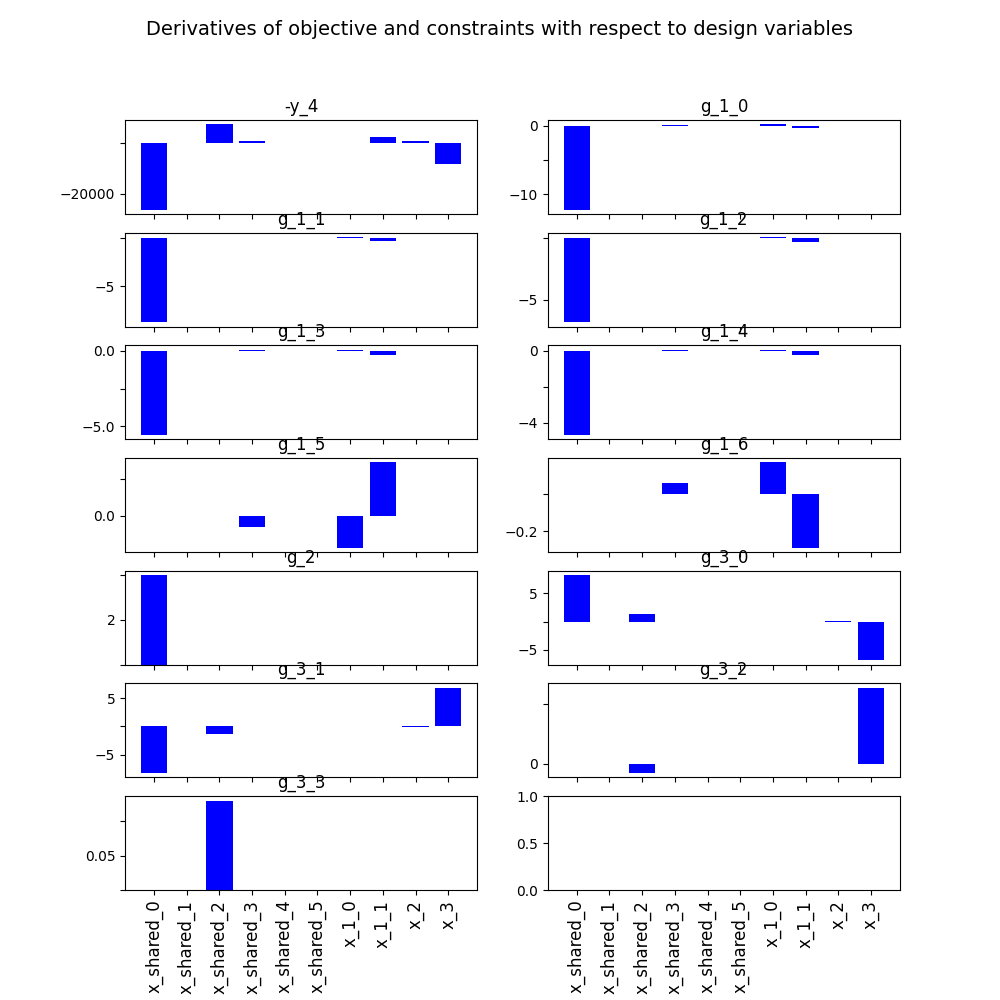

Gradient Sensitivity¶

In this example, we illustrate the use of the GradientSensitivity plot on Sobieski’s SSBJ problem.

from __future__ import annotations

from gemseo.api import configure_logger

from gemseo.api import create_discipline

from gemseo.api import create_scenario

from gemseo.problems.sobieski.core.problem import SobieskiProblem

from matplotlib import pyplot as plt

Import¶

The first step is to import some functions from the API and the SobieskiProblem class, which provides the design space.

configure_logger()

<RootLogger root (INFO)>

Description¶

The GradientSensitivity post-processor

builds histograms of derivatives of the objective and the constraints.

Create disciplines¶

At this point, we instantiate the disciplines of Sobieski’s SSBJ problem: Propulsion, Aerodynamics, Structure and Mission.

disciplines = create_discipline(
    [
        "SobieskiPropulsion",
        "SobieskiAerodynamics",
        "SobieskiStructure",
        "SobieskiMission",
    ]
)

Create design space¶

We also read the design space from the SobieskiProblem.

design_space = SobieskiProblem().design_space

Create and execute scenario¶

The next step is to build an MDO scenario in order to maximize the range,
encoded as "y_4", with respect to the design parameters, while satisfying the
inequality constraints "g_1", "g_2" and "g_3". We use the MDF
formulation, the SLSQP optimization algorithm and a maximum number of iterations
equal to 10.

scenario = create_scenario(
    disciplines,
    formulation="MDF",
    objective_name="y_4",
    maximize_objective=True,
    design_space=design_space,
)
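With maximize_objective=True, the optimizer still minimizes: the execution log reports the problem as "minimize -y_4", and the objective value it prints is the negated range. The block below is a minimal, self-contained sketch of that sign convention on a toy one-dimensional function. The names toy_range and golden_section_minimize are hypothetical and introduced only for illustration; they are not part of GEMSEO, and the search routine is a generic golden-section search, not the SLSQP algorithm used in this example.

```python
import math


def golden_section_minimize(func, lower, upper, tol=1e-6):
    """Minimize a unimodal function on [lower, upper] (generic sketch)."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lower, upper
    while abs(b - a) > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if func(c) < func(d):
            b = d  # the minimum lies in [a, d]
        else:
            a = c  # the minimum lies in [c, b]
    return (a + b) / 2.0


def toy_range(x):
    """Hypothetical stand-in for the quantity to maximize (maximum 5 at x = 2)."""
    return 5.0 - (x - 2.0) ** 2


# Maximizing toy_range is done by minimizing its negation.
x_opt = golden_section_minimize(lambda x: -toy_range(x), 0.0, 4.0)
minimized_value = -toy_range(x_opt)  # what a minimizer would report
maximized_value = -minimized_value  # flip the sign to recover the maximum

print(f"x_opt = {x_opt:.4f}")
print(f"reported (minimized) objective = {minimized_value:.4f}")
print(f"recovered maximum = {maximized_value:.4f}")
```

Reading the log of this example the same way, a reported objective of about -3749.89 corresponds to a range of about 3749.89.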

The differentiation method used by default is "user", which means that the
gradient is evaluated from the Jacobian defined in each discipline. However, some
disciplines may not provide one; in that case, the gradient can be approximated
with the "finite_differences" or "complex_step" technique, selected with the method
set_differentiation_method(). The following line is shown as an
example; it has no effect because it does not change the default method.

scenario.set_differentiation_method("user")

for constraint in ["g_1", "g_2", "g_3"]:
    scenario.add_constraint(constraint, "ineq")

scenario.execute({"algo": "SLSQP", "max_iter": 10})

INFO - 14:43:29:

INFO - 14:43:29: *** Start MDOScenario execution ***

INFO - 14:43:29: MDOScenario

INFO - 14:43:29: Disciplines: SobieskiAerodynamics SobieskiMission SobieskiPropulsion SobieskiStructure

INFO - 14:43:29: MDO formulation: MDF

INFO - 14:43:30: Optimization problem:

INFO - 14:43:30: minimize -y_4(x_shared, x_1, x_2, x_3)

INFO - 14:43:30: with respect to x_1, x_2, x_3, x_shared

INFO - 14:43:30: subject to constraints:

INFO - 14:43:30: g_1(x_shared, x_1, x_2, x_3) <= 0.0

INFO - 14:43:30: g_2(x_shared, x_1, x_2, x_3) <= 0.0

INFO - 14:43:30: g_3(x_shared, x_1, x_2, x_3) <= 0.0

INFO - 14:43:30: over the design space:

INFO - 14:43:30: +-------------+-------------+-------+-------------+-------+
INFO - 14:43:30: | name        | lower_bound | value | upper_bound | type  |
INFO - 14:43:30: +-------------+-------------+-------+-------------+-------+
INFO - 14:43:30: | x_shared[0] | 0.01        | 0.05  | 0.09        | float |
INFO - 14:43:30: | x_shared[1] | 30000       | 45000 | 60000       | float |
INFO - 14:43:30: | x_shared[2] | 1.4         | 1.6   | 1.8         | float |
INFO - 14:43:30: | x_shared[3] | 2.5         | 5.5   | 8.5         | float |
INFO - 14:43:30: | x_shared[4] | 40          | 55    | 70          | float |
INFO - 14:43:30: | x_shared[5] | 500         | 1000  | 1500        | float |
INFO - 14:43:30: | x_1[0]      | 0.1         | 0.25  | 0.4         | float |
INFO - 14:43:30: | x_1[1]      | 0.75        | 1     | 1.25        | float |
INFO - 14:43:30: | x_2         | 0.75        | 1     | 1.25        | float |
INFO - 14:43:30: | x_3         | 0.1         | 0.5   | 1           | float |
INFO - 14:43:30: +-------------+-------------+-------+-------------+-------+

INFO - 14:43:30: Solving optimization problem with algorithm SLSQP:

INFO - 14:43:30: ... 0%| | 0/10 [00:00<?, ?it]

INFO - 14:43:30: ... 20%|██ | 2/10 [00:00<00:00, 40.63 it/sec, obj=-2.12e+3]

WARNING - 14:43:30: MDAJacobi has reached its maximum number of iterations but the normed residual 1.4486313079508508e-06 is still above the tolerance 1e-06.

INFO - 14:43:30: ... 30%|███ | 3/10 [00:00<00:00, 23.56 it/sec, obj=-3.75e+3]

INFO - 14:43:30: ... 40%|████ | 4/10 [00:00<00:00, 17.21 it/sec, obj=-4.01e+3]

WARNING - 14:43:30: MDAJacobi has reached its maximum number of iterations but the normed residual 2.928004141058104e-06 is still above the tolerance 1e-06.

INFO - 14:43:30: ... 50%|█████ | 5/10 [00:00<00:00, 13.11 it/sec, obj=-4.49e+3]

INFO - 14:43:30: ... 60%|██████ | 6/10 [00:00<00:00, 11.11 it/sec, obj=-3.4e+3]

INFO - 14:43:31: ... 80%|████████ | 8/10 [00:01<00:00, 9.46 it/sec, obj=-4.76e+3]

INFO - 14:43:31: ... 100%|██████████| 10/10 [00:01<00:00, 8.23 it/sec, obj=-4.56e+3]

INFO - 14:43:31: ... 100%|██████████| 10/10 [00:01<00:00, 8.21 it/sec, obj=-4.56e+3]

INFO - 14:43:31: Optimization result:

INFO - 14:43:31: Optimizer info:

INFO - 14:43:31: Status: None

INFO - 14:43:31: Message: Maximum number of iterations reached. GEMSEO Stopped the driver

INFO - 14:43:31: Number of calls to the objective function by the optimizer: 12

INFO - 14:43:31: Solution:

INFO - 14:43:31: The solution is feasible.

INFO - 14:43:31: Objective: -3749.8868975554387

INFO - 14:43:31: Standardized constraints:

INFO - 14:43:31: g_1 = [-0.01671296 -0.03238836 -0.04350867 -0.05123129 -0.05681738 -0.13780658

INFO - 14:43:31: -0.10219342]

INFO - 14:43:31: g_2 = -0.0004062839430756249

INFO - 14:43:31: g_3 = [-0.66482546 -0.33517454 -0.11023156 -0.183255 ]

INFO - 14:43:31: Design space:

INFO - 14:43:31: +-------------+-------------+---------------------+-------------+-------+
INFO - 14:43:31: | name        | lower_bound | value               | upper_bound | type  |
INFO - 14:43:31: +-------------+-------------+---------------------+-------------+-------+
INFO - 14:43:31: | x_shared[0] | 0.01        | 0.05989842901423112 | 0.09        | float |
INFO - 14:43:31: | x_shared[1] | 30000       | 59853.73840058666   | 60000       | float |
INFO - 14:43:31: | x_shared[2] | 1.4         | 1.4                 | 1.8         | float |
INFO - 14:43:31: | x_shared[3] | 2.5         | 2.527371250092273   | 8.5         | float |
INFO - 14:43:31: | x_shared[4] | 40          | 69.86825198198687   | 70          | float |
INFO - 14:43:31: | x_shared[5] | 500         | 1495.734648986894   | 1500        | float |
INFO - 14:43:31: | x_1[0]      | 0.1         | 0.4                 | 0.4         | float |
INFO - 14:43:31: | x_1[1]      | 0.75        | 0.7521124139939552  | 1.25        | float |
INFO - 14:43:31: | x_2         | 0.75        | 0.7520888531444992  | 1.25        | float |
INFO - 14:43:31: | x_3         | 0.1         | 0.1398000762238233  | 1           | float |
INFO - 14:43:31: +-------------+-------------+---------------------+-------------+-------+

INFO - 14:43:31: *** End MDOScenario execution (time: 0:00:01.233712) ***

{'max_iter': 10, 'algo': 'SLSQP'}
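As mentioned before the execution, a gradient can be approximated with "finite_differences" or "complex_step" when a discipline provides no Jacobian. To make the difference between the two techniques concrete, here is a minimal sketch on a toy scalar function, using only the standard library. It is an illustration of the general numerical methods under simple assumptions (forward differences, fixed step sizes), not GEMSEO's implementation; toy_function and the step values are hypothetical choices.

```python
import cmath
import math


def toy_function(x):
    """Toy function exp(x) * sin(x); cmath lets it accept complex inputs."""
    return cmath.exp(x) * cmath.sin(x)


def exact_derivative(x):
    """Analytic derivative, playing the role of a user-provided Jacobian."""
    return math.exp(x) * (math.sin(x) + math.cos(x))


def finite_difference(func, x, step=1e-7):
    """Forward finite difference: (f(x + h) - f(x)) / h."""
    return (func(x + step) - func(x)).real / step


def complex_step(func, x, step=1e-30):
    """Complex-step approximation: Im(f(x + i h)) / h."""
    return func(complex(x, step)).imag / step


x0 = 1.5
exact = exact_derivative(x0)
fd_error = abs(finite_difference(toy_function, x0) - exact)
cs_error = abs(complex_step(toy_function, x0) - exact)

print(f"finite differences error: {fd_error:.2e}")
print(f"complex step error:       {cs_error:.2e}")
```

The complex-step formula involves no subtraction of nearly equal numbers, so its step can be made extremely small without round-off trouble; the price is that the function must accept complex inputs. Finite differences only need real evaluations, but their accuracy depends on a well-chosen step.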

Post-process scenario¶

Lastly, we post-process the scenario by means of the GradientSensitivity
post-processor, which builds histograms of the derivatives of the objective and the constraints.
The sensitivities shown in the plot are calculated with the gradient at the optimum,
or at the least infeasible point when the result is not feasible. One may choose any
other iteration instead.
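The rule "optimum, or least infeasible point otherwise" can be sketched as follows. This is only an illustration under stated assumptions: the history layout, the function name select_reference_iteration, and the infeasibility measure (sum of the positive parts of constraints written as g <= 0) are hypothetical, and GEMSEO may measure infeasibility differently. The values in the two histories are made up for the demonstration.

```python
def constraint_violation(entry):
    """Sum of the positive parts of the constraints (feasible when g <= 0)."""
    return sum(max(0.0, value) for value in entry["constraints"])


def select_reference_iteration(history):
    """Return the index of the best feasible iterate, else the least infeasible one.

    Each entry is a dict with an "objective" to minimize and a list of
    "constraints" values; this layout is an assumption made for the sketch.
    """
    feasible = [
        index
        for index, entry in enumerate(history)
        if constraint_violation(entry) == 0.0
    ]
    if feasible:
        return min(feasible, key=lambda index: history[index]["objective"])
    return min(
        range(len(history)),
        key=lambda index: constraint_violation(history[index]),
    )


# Hypothetical histories, for illustration only.
mixed_history = [
    {"objective": -10.0, "constraints": [0.2, -0.1]},  # infeasible
    {"objective": -8.0, "constraints": [-0.3, -0.1]},  # feasible
    {"objective": -9.0, "constraints": [-0.2, 0.0]},  # feasible, better objective
]
infeasible_history = [
    {"objective": -10.0, "constraints": [0.5]},
    {"objective": -7.0, "constraints": [0.1, 0.2]},
]

best_mixed = select_reference_iteration(mixed_history)
best_infeasible = select_reference_iteration(infeasible_history)
print(best_mixed, best_infeasible)
```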

Note

In some cases, the iteration used to compute the sensitivities
corresponds to a point for which the algorithm did not request the evaluation of
the gradients, and a ValueError is raised. A way to avoid this issue is to set
the option compute_missing_gradients of GradientSensitivity to
True; GEMSEO will then compute the gradients for the requested iteration if
they are not available.

Warning

Please note that this extra computation may be expensive depending on the

OptimizationProblem defined by the user. Additionally, keep in mind that

GEMSEO cannot compute missing gradients for an OptimizationProblem that was

imported from an HDF5 file.
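Because the extra gradient computation may be expensive, one possible pattern is to request it only when the first attempt fails with the ValueError described in the note. The helper below is a hedged sketch of that pattern, not a GEMSEO function: post_process_with_fallback is a hypothetical name, and StubScenario is a stand-in object that mimics the failure so the snippet runs without GEMSEO. With a real scenario, the same helper would be called with the scenario object instead of the stub; the example on this page simply passes compute_missing_gradients=True directly.

```python
def post_process_with_fallback(scenario, **options):
    """Try GradientSensitivity as is; recompute missing gradients only if needed."""
    try:
        return scenario.post_process("GradientSensitivity", **options)
    except ValueError:
        # The gradients are missing for the requested iteration:
        # retry and let the post-processor compute them.
        retry_options = {**options, "compute_missing_gradients": True}
        return scenario.post_process("GradientSensitivity", **retry_options)


class StubScenario:
    """Hypothetical stand-in that fails unless missing gradients are computed."""

    def __init__(self):
        self.calls = []

    def post_process(self, name, **options):
        self.calls.append((name, options))
        if not options.get("compute_missing_gradients", False):
            raise ValueError("The gradients are not available.")
        return "figure"


stub = StubScenario()
result = post_process_with_fallback(stub, save=False, show=False)
print(result, len(stub.calls))
```

Keep in mind the limitation stated in the warning: this retry cannot help for an OptimizationProblem imported from an HDF5 file.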

Tip

Each post-processing method requires different inputs and offers a variety

of customization options. Use the API function

get_post_processing_options_schema() to print a table with

the options for any post-processing algorithm.

Alternatively, refer to the dedicated page: Post-processing algorithms.

scenario.post_process(
    "GradientSensitivity", save=False, show=False, compute_missing_gradients=True
)

# Workaround for HTML rendering, instead of ``show=True``

plt.show()

WARNING - 14:43:31: MDAJacobi has reached its maximum number of iterations but the normed residual 1.4486313079508508e-06 is still above the tolerance 1e-06.

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/4.1.0/lib/python3.9/site-packages/gemseo/post/gradient_sensitivity.py:201: UserWarning: FixedFormatter should only be used together with FixedLocator

axe.set_xticklabels(design_names, fontsize=font_size, rotation=rotation)

Total running time of the script: (0 minutes 2.362 seconds)