doe_scenario module¶

A scenario whose driver is a design of experiments.

- class gemseo.core.doe_scenario.DOEScenario(disciplines, formulation, objective_name, design_space, name=None, grammar_type='JSONGrammar', maximize_objective=False, **formulation_options)[source]¶

Bases: Scenario

A multidisciplinary scenario to be executed by a design of experiments (DOE).

A DOEScenario is a particular Scenario whose driver is a DOE. This DOE must be implemented in a DOELibrary.

- Parameters:

disciplines (Sequence[MDODiscipline]) – The disciplines used to compute the objective, constraints and observables from the design variables.

formulation (str) – The class name of the MDOFormulation, e.g. "MDF", "IDF" or "BiLevel".

objective_name (str | Sequence[str]) – The name(s) of the discipline output(s) used as objective. If multiple names are passed, the objective will be a vector.

design_space (DesignSpace) – The search space including at least the design variables (some formulations require additional variables, e.g. IDF with the coupling variables).

name (str | None) – The name to be given to this scenario. If None, use the name of the class.

grammar_type (str) –

The type of grammar used to declare the input and output variables, either JSON_GRAMMAR_TYPE or SIMPLE_GRAMMAR_TYPE.

By default it is set to “JSONGrammar”.

maximize_objective (bool) –

Whether to maximize the objective.

By default it is set to False.

**formulation_options (Any) – The options of the MDOFormulation.

- classmethod activate_time_stamps()¶

Activate the time stamps.

For storing start and end times of execution and linearizations.

- Return type:

None

- add_constraint(output_name, constraint_type='eq', constraint_name=None, value=None, positive=False, **kwargs)¶

Add a design constraint.

This constraint is in addition to those created by the formulation, e.g. consistency constraints in IDF.

How the constraints are distributed is defined by the formulation.

- Parameters:

output_name (str | Sequence[str]) – The names of the outputs to be used as constraints. For instance, if “g_1” is given and constraint_type=”eq”, g_1=0 will be added as constraint to the optimizer. If several names are given, a single discipline must provide all outputs.

constraint_type (str) –

The type of constraint, “eq” for equality constraint and “ineq” for inequality constraint.

By default it is set to “eq”.

constraint_name (str | None) – The name of the constraint to be stored. If None, the name of the constraint is generated from the output name.

value (float | None) – The value for which the constraint is active. If None, this value is 0.

positive (bool) –

If True, the inequality constraint is positive.

By default it is set to False.

- Raises:

ValueError – If the constraint type is neither ‘eq’ nor ‘ineq’.

- Return type:

None
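The roles of constraint_type, value and positive can be illustrated with a small standalone check. This is a sketch under assumptions, not the library code: the helper name, the tolerance argument and the reading of a "positive" inequality as output >= value (and of the default inequality as output <= value) are choices made here for illustration.

```python
def is_constraint_satisfied(
    output_value, constraint_type="eq", value=None, positive=False, tolerance=1e-9
):
    """Check a scalar output against the constraint described above.

    Assumed semantics: "eq" means output == value, "ineq" means
    output <= value, or output >= value when positive is True.
    """
    # A missing value means that the constraint is active at 0.
    threshold = 0.0 if value is None else value
    if constraint_type == "eq":
        return abs(output_value - threshold) <= tolerance
    if constraint_type == "ineq":
        if positive:
            return output_value >= threshold - tolerance
        return output_value <= threshold + tolerance
    raise ValueError("The constraint type must be either 'eq' or 'ineq'.")


eq_ok = is_constraint_satisfied(0.0)  # g_1 = 0
ineq_ok = is_constraint_satisfied(-0.5, "ineq")  # g_1 <= 0
positive_ok = is_constraint_satisfied(1.5, "ineq", 1.0, positive=True)  # g_1 >= 1
```

Any other constraint type raises a ValueError, mirroring the documented behaviour.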

- add_differentiated_inputs(inputs=None)¶

Add the inputs against which to differentiate the outputs.

If the discipline grammar type is MDODiscipline.JSON_GRAMMAR_TYPE and an input is either a non-numeric array or not an array, it will be ignored. If an input is declared as an array but the type of its items is not defined, it is assumed to be a numeric array.

If the discipline grammar type is MDODiscipline.SIMPLE_GRAMMAR_TYPE and an input is not an array, it will be ignored. Keep in mind that in this case the array subtype is not checked.

- Parameters:

inputs (Iterable[str] | None) – The input variables against which to differentiate the outputs. If None, all the inputs of the discipline are used.

- Raises:

ValueError – When the inputs with respect to which to differentiate the discipline are not inputs of the discipline.

- Return type:

None

- add_differentiated_outputs(outputs=None)¶

Add the outputs to be differentiated.

If the discipline grammar type is MDODiscipline.JSON_GRAMMAR_TYPE and an output is either a non-numeric array or not an array, it will be ignored. If an output is declared as an array but the type of its items is not defined, it is assumed to be a numeric array.

If the discipline grammar type is MDODiscipline.SIMPLE_GRAMMAR_TYPE and an output is not an array, it will be ignored. Keep in mind that in this case the array subtype is not checked.

- Parameters:

outputs (Iterable[str] | None) – The output variables to be differentiated. If None, all the outputs of the discipline are used.

- Raises:

ValueError – When the outputs to differentiate are not discipline outputs.

- Return type:

None

- add_namespace_to_input(name, namespace)¶

Add a namespace prefix to an existing input grammar element.

The updated input grammar element name will be

namespace+namespaces_separator+name.

- add_namespace_to_output(name, namespace)¶

Add a namespace prefix to an existing output grammar element.

The updated output grammar element name will be

namespace+namespaces_separator+name.
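The naming rule shared by add_namespace_to_input and add_namespace_to_output can be sketched in plain Python. The helper name and the separator value ":" are assumptions made for illustration; the actual separator is whatever namespaces_separator holds in the library.

```python
# Illustrative sketch only: NAMESPACES_SEPARATOR and add_namespace are
# hypothetical names, not part of the documented API.
NAMESPACES_SEPARATOR = ":"  # assumed value of namespaces_separator


def add_namespace(name: str, namespace: str) -> str:
    """Return the namespaced name: namespace + separator + name."""
    return namespace + NAMESPACES_SEPARATOR + name


renamed = add_namespace("x", "wing")
print(renamed)
```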

- add_observable(output_names, observable_name=None, discipline=None)¶

Add an observable to the optimization problem.

How the observable is distributed is defined in the formulation class. When more than one output name is provided, the observable function returns a concatenated array of the output values.

- Parameters:

output_names (Sequence[str]) – The names of the outputs to observe.

observable_name (Sequence[str] | None) – The name to be given to the observable. If None, the output name is used by default.

discipline (MDODiscipline | None) – The discipline used to build the observable function. If None, detect the discipline from the inner disciplines.

- Return type:

None

- add_status_observer(obs)¶

Add an observer for the status.

The observer is notified whenever the status of this object changes.

- Parameters:

obs (Any) – The observer to add.

- Return type:

None

- auto_get_grammar_file(is_input=True, name=None, comp_dir=None)¶

Use a naming convention to associate a grammar file to the discipline.

Search in the directory comp_dir for either an input grammar file named name + "_input.json" or an output grammar file named name + "_output.json".

- Parameters:

is_input (bool) –

Whether to search for an input or output grammar file.

By default it is set to True.

name (str | None) – The name to be searched in the file names. If None, use the name of the discipline class.

comp_dir (str | Path | None) – The directory in which to search the grammar file. If None, use the GRAMMAR_DIRECTORY if any, or the directory of the discipline class module.

- Returns:

The grammar file path.

- Return type:
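The file naming convention used by auto_get_grammar_file can be mimicked with the standard library. The helper below is hypothetical, as is the discipline name "Aero"; raising FileNotFoundError for a missing file is a choice of this sketch and says nothing about what the library does in that case.

```python
from pathlib import Path
import tempfile


def find_grammar_file(name: str, comp_dir: Path, is_input: bool = True) -> Path:
    """Return the path of name_input.json or name_output.json in comp_dir.

    Hypothetical helper mimicking the documented naming convention.
    """
    suffix = "_input.json" if is_input else "_output.json"
    path = Path(comp_dir) / (name + suffix)
    if not path.is_file():
        raise FileNotFoundError(f"No grammar file {path.name} in {comp_dir}.")
    return path


with tempfile.TemporaryDirectory() as directory:
    # Create a dummy input grammar for a discipline assumed to be named "Aero".
    (Path(directory) / "Aero_input.json").write_text("{}")
    found_name = find_grammar_file("Aero", Path(directory)).name
    print(found_name)
```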

- check_input_data(input_data, raise_exception=True)¶

Check the input data validity.

- check_jacobian(input_data=None, derr_approx='finite_differences', step=1e-07, threshold=1e-08, linearization_mode='auto', inputs=None, outputs=None, parallel=False, n_processes=2, use_threading=False, wait_time_between_fork=0, auto_set_step=False, plot_result=False, file_path='jacobian_errors.pdf', show=False, fig_size_x=10, fig_size_y=10, reference_jacobian_path=None, save_reference_jacobian=False, indices=None)¶

Check if the analytical Jacobian is correct with respect to a reference one.

If reference_jacobian_path is not None and save_reference_jacobian is True, compute the reference Jacobian with the approximation method and save it in reference_jacobian_path.

If reference_jacobian_path is not None and save_reference_jacobian is False, do not compute the reference Jacobian but read it from reference_jacobian_path.

If reference_jacobian_path is None, compute the reference Jacobian without saving it.

- Parameters:

input_data (dict[str, ndarray] | None) – The input data needed to execute the discipline according to the discipline input grammar. If None, use the MDODiscipline.default_inputs.

derr_approx (str) –

The approximation method, either “complex_step” or “finite_differences”.

By default it is set to “finite_differences”.

threshold (float) –

The acceptance threshold for the Jacobian error.

By default it is set to 1e-08.

linearization_mode (str) –

The mode of linearization: direct, adjoint, or an automated switch depending on the dimensions of the inputs and outputs.

By default it is set to “auto”.

inputs (Iterable[str] | None) – The names of the inputs wrt which to differentiate the outputs.

outputs (Iterable[str] | None) – The names of the outputs to be differentiated.

step (float) –

The differentiation step.

By default it is set to 1e-07.

parallel (bool) –

Whether to differentiate the discipline in parallel.

By default it is set to False.

n_processes (int) –

The maximum simultaneous number of threads, if use_threading is True, or processes otherwise, used to parallelize the execution.

By default it is set to 2.

use_threading (bool) –

Whether to use threads instead of processes to parallelize the execution; multiprocessing will copy (serialize) all the disciplines, while threading will share all the memory. This is important to note: if you want to execute the same discipline multiple times, you shall use multiprocessing.

By default it is set to False.

wait_time_between_fork (float) –

The time waited between two forks of the process / thread.

By default it is set to 0.

auto_set_step (bool) –

Whether to compute the optimal step for a forward first order finite differences gradient approximation.

By default it is set to False.

plot_result (bool) –

Whether to plot the result of the validation (computed vs approximated Jacobians).

By default it is set to False.

file_path (str | Path) –

The path to the output file if plot_result is True.

By default it is set to “jacobian_errors.pdf”.

show (bool) –

Whether to open the figure.

By default it is set to False.

fig_size_x (float) –

The x-size of the figure in inches.

By default it is set to 10.

fig_size_y (float) –

The y-size of the figure in inches.

By default it is set to 10.

reference_jacobian_path (str | Path | None) – The path of the reference Jacobian file.

save_reference_jacobian (bool) –

Whether to save the reference Jacobian.

By default it is set to False.

indices (Iterable[int] | None) – The indices of the inputs and outputs for the different sub-Jacobian matrices, formatted as {variable_name: variable_components} where variable_components can be either an integer, e.g. 2, a sequence of integers, e.g. [0, 3], a slice, e.g. slice(0,3), the ellipsis symbol (…) or None, which is the same as ellipsis. If a variable name is missing, consider all its components. If None, consider all the components of all the inputs and outputs.

- Returns:

Whether the analytical Jacobian is correct with respect to the reference one.
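The principle behind check_jacobian, comparing a hand-written Jacobian with a finite-difference reference, can be sketched without the library. Everything below is illustrative: the toy function, the helper names and the entry-wise relative error measure are assumptions, and the threshold is deliberately looser than the documented default because a forward difference with step 1e-07 is only accurate to about that order.

```python
import math


def func(x):
    """Toy function from R^2 to R^2."""
    return [x[0] ** 2 * x[1], math.sin(x[0]) + x[1]]


def analytic_jac(x):
    """Hand-derived Jacobian of func, as a list of rows."""
    return [[2 * x[0] * x[1], x[0] ** 2], [math.cos(x[0]), 1.0]]


def fd_jac(f, x, step=1e-7):
    """Forward finite-difference approximation of the Jacobian of f at x."""
    f0 = f(x)
    columns = []
    for j in range(len(x)):
        x_pert = list(x)
        x_pert[j] += step
        f1 = f(x_pert)
        columns.append([(a - b) / step for a, b in zip(f1, f0)])
    # columns[j][i] holds d f_i / d x_j, so transpose to get rows.
    return [list(row) for row in zip(*columns)]


def jacobian_is_correct(jac, ref, threshold):
    """Compare two Jacobians entry by entry with a relative error measure."""
    return all(
        abs(a - r) <= threshold * max(1.0, abs(r))
        for row_a, row_r in zip(jac, ref)
        for a, r in zip(row_a, row_r)
    )


x0 = [1.0, 2.0]
reference = fd_jac(func, x0)
ok = jacobian_is_correct(analytic_jac(x0), reference, threshold=1e-5)
wrong = [[0.0, 0.0], [0.0, 0.0]]
not_ok = jacobian_is_correct(wrong, reference, threshold=1e-5)
```

Here ok is True for the correct Jacobian and not_ok is False for the deliberately wrong one.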

- check_output_data(raise_exception=True)¶

Check the output data validity.

- Parameters:

raise_exception (bool) –

Whether to raise an exception when the data is invalid.

By default it is set to True.

- Return type:

None

- classmethod deactivate_time_stamps()¶

Deactivate the time stamps.

For storing start and end times of execution and linearizations.

- Return type:

None

- static deserialize(file_path)¶

Deserialize a discipline from a file.

- Parameters:

file_path (str | Path) – The path to the file containing the discipline.

- Returns:

The discipline instance.

- Return type:

- execute(input_data=None)¶

Execute the discipline.

This method executes the discipline:

1. Adds the default inputs to the input_data if some inputs are not defined in input_data but exist in MDODiscipline.default_inputs.

2. Checks whether the last execution of the discipline was called with identical inputs, i.e. cached in MDODiscipline.cache; if so, directly returns self.cache.get_output_cache(inputs).

3. Caches the inputs.

4. Checks the input data against MDODiscipline.input_grammar.

5. If MDODiscipline.data_processor is not None, runs the preprocessor.

6. Updates the status to MDODiscipline.STATUS_RUNNING.

7. Calls the MDODiscipline._run() method, that shall be defined.

8. If MDODiscipline.data_processor is not None, runs the postprocessor.

9. Checks the output data.

10. Caches the outputs.

11. Updates the status to MDODiscipline.STATUS_DONE or MDODiscipline.STATUS_FAILED.

12. Updates summed execution time.

- Parameters:

input_data (Mapping[str, Any] | None) – The input data needed to execute the discipline according to the discipline input grammar. If None, use the MDODiscipline.default_inputs.

- Returns:

The discipline local data after execution.

- Raises:

RuntimeError – When residual_variables are declared but self.run_solves_residuals is False. This is not supported yet.

- Return type:
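The execution steps of execute() can be condensed into a toy class to show how default inputs, the cache and the status interact. This is a simplified sketch, not the library implementation: TinyDiscipline is hypothetical, a type check stands in for the input grammar, and the data processor and timing steps are left out.

```python
class TinyDiscipline:
    """Hypothetical stand-in for a discipline computing y = 2 * x."""

    def __init__(self):
        self.default_inputs = {"x": 1.0}
        self.status = "PENDING"
        self.n_calls = 0
        self._cached_inputs = None
        self._cached_outputs = None

    def _run(self, inputs):
        return {"y": 2.0 * inputs["x"]}

    def execute(self, input_data=None):
        # Complete the input data with the default inputs.
        inputs = {**self.default_inputs, **(input_data or {})}
        # Return the cached outputs if the inputs are unchanged.
        if inputs == self._cached_inputs:
            return {**inputs, **self._cached_outputs}
        # Check the inputs (a type check stands in for the input grammar).
        if not all(isinstance(value, float) for value in inputs.values()):
            raise TypeError("Invalid input data.")
        self.status = "RUNNING"
        try:
            outputs = self._run(inputs)
        except Exception:
            self.status = "FAILED"
            raise
        # Cache the inputs and outputs, then update the status and counter.
        self._cached_inputs, self._cached_outputs = inputs, outputs
        self.status = "DONE"
        self.n_calls += 1
        return {**inputs, **outputs}


discipline = TinyDiscipline()
first = discipline.execute()  # uses the default input x = 1.0
second = discipline.execute({"x": 1.0})  # same inputs: served from the cache
third = discipline.execute({"x": 3.0})  # new inputs: _run is called again
```

Only two of the three calls reach _run, which is the point of the cache check at the start of the method.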

- export_to_dataset(name=None, by_group=True, categorize=True, opt_naming=True, export_gradients=False)[source]¶

Export the database of the optimization problem to a Dataset.

The variables can be classified into groups: Dataset.DESIGN_GROUP or Dataset.INPUT_GROUP for the design variables and Dataset.FUNCTION_GROUP or Dataset.OUTPUT_GROUP for the functions (objective, constraints and observables).

- Parameters:

name (str | None) – The name to be given to the dataset. If None, use the name of the OptimizationProblem.database.

by_group (bool) –

Whether to store the data by group in Dataset.data, in the sense of one unique NumPy array per group. If categorize is False, there is a unique group: Dataset.PARAMETER_GROUP. If categorize is True, the groups can be either Dataset.DESIGN_GROUP and Dataset.FUNCTION_GROUP if opt_naming is True, or Dataset.INPUT_GROUP and Dataset.OUTPUT_GROUP. If by_group is False, store the data by variable names.

By default it is set to True.

categorize (bool) –

Whether to distinguish between the different groups of variables. Otherwise, group all the variables in Dataset.PARAMETER_GROUP.

By default it is set to True.

opt_naming (bool) –

Whether to use Dataset.DESIGN_GROUP and Dataset.FUNCTION_GROUP as groups. Otherwise, use Dataset.INPUT_GROUP and Dataset.OUTPUT_GROUP.

By default it is set to True.

export_gradients (bool) –

Whether to export the gradients of the functions (objective function, constraints and observables) if the latter are available in the database of the optimization problem.

By default it is set to False.

- Returns:

A dataset built from the database of the optimization problem.

- Return type:

- get_all_inputs()¶

Return the local input data as a list.

The order is given by

MDODiscipline.get_input_data_names().

- get_all_outputs()¶

Return the local output data as a list.

The order is given by

MDODiscipline.get_output_data_names().

- get_attributes_to_serialize()¶

Define the names of the attributes to be serialized.

Shall be overloaded by disciplines

- static get_data_list_from_dict(keys, data_dict)¶

Filter the dict from a list of keys or a single key.

If keys is a string, then the method returns the value associated with the key. If keys is a list of strings, then the method returns a generator of the values corresponding to the keys, which can be iterated over.
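The two behaviours, a single value for a string key and a generator for several keys, can be reproduced in a few lines. The function below is a re-implementation written for illustration from the description above, not the library source.

```python
def get_data_list_from_dict(keys, data_dict):
    """Return one value for a single key, or a generator for several keys.

    Re-implementation for illustration, not the library source.
    """
    if isinstance(keys, str):
        return data_dict[keys]
    return (data_dict[key] for key in keys)


data = {"x": 1.0, "y": 2.0, "z": 3.0}
single = get_data_list_from_dict("x", data)
several = list(get_data_list_from_dict(["z", "x"], data))
```

The generator yields the values in the order of the requested keys, so it has to be consumed (here with list) before it can be indexed.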

- get_disciplines_in_dataflow_chain()¶

Return the disciplines that must be shown as blocks in the XDSM.

By default, only the discipline itself is shown. This function can be differently implemented for any type of inherited discipline.

- Returns:

The disciplines shown in the XDSM chain.

- Return type:

- get_disciplines_statuses()¶

Retrieve the statuses of the disciplines.

- get_expected_dataflow()¶

Return the expected data exchange sequence.

This method is used for the XDSM representation.

The default expected data exchange sequence is an empty list.

See also

MDOFormulation.get_expected_dataflow

- Returns:

The data exchange arcs.

- Return type:

list[tuple[gemseo.core.discipline.MDODiscipline, gemseo.core.discipline.MDODiscipline, list[str]]]

- get_expected_workflow()¶

Return the expected execution sequence.

This method is used for the XDSM representation.

The default expected execution sequence is the execution of the discipline itself.

See also

MDOFormulation.get_expected_workflow

- Returns:

The expected execution sequence.

- Return type:

- get_input_data(with_namespaces=True)¶

Return the local input data as a dictionary.

- get_input_data_names(with_namespaces=True)¶

Return the names of the input variables.

- get_input_output_data_names(with_namespaces=True)¶

Return the names of the input and output variables.

- Parameters:

with_namespaces (bool) – Whether to keep the namespace prefix of the output names, if any.

- get_inputs_asarray()¶

Return the local input data as a large NumPy array.

The order is the one of MDODiscipline.get_all_inputs().

- Returns:

The local input data.

- Return type:

- get_inputs_by_name(data_names)¶

Return the local data associated with input variables.

- Parameters:

data_names (Iterable[str]) – The names of the input variables.

- Returns:

The local data for the given input variables.

- Raises:

ValueError – When a variable is not an input of the discipline.

- Return type:

- get_local_data_by_name(data_names)¶

Return the local data of the discipline associated with variable names.

- Parameters:

data_names (Iterable[str]) – The names of the variables.

- Returns:

The local data associated with the variable names.

- Raises:

ValueError – When a name is not a discipline input name.

- Return type:

Generator[Any]

- get_optim_variables_names()¶

A convenience function to access the optimization variables.

- get_optimum()¶

Return the optimization results.

- Returns:

The optimal solution found by the scenario if executed, None otherwise.

- Return type:

OptimizationResult | None

- get_output_data(with_namespaces=True)¶

Return the local output data as a dictionary.

- get_output_data_names(with_namespaces=True)¶

Return the names of the output variables.

- get_outputs_asarray()¶

Return the local output data as a large NumPy array.

The order is the one of MDODiscipline.get_all_outputs().

- Returns:

The local output data.

- Return type:

- get_outputs_by_name(data_names)¶

Return the local data associated with output variables.

- Parameters:

data_names (Iterable[str]) – The names of the output variables.

- Returns:

The local data for the given output variables.

- Raises:

ValueError – When a variable is not an output of the discipline.

- Return type:

- get_sub_disciplines(recursive=False)¶

Determine the sub-disciplines.

This method lists the sub-disciplines’ disciplines. It will list up to one level of disciplines contained inside another one unless the recursive argument is set to True.

- Parameters:

recursive (bool) –

If True, the method will look inside any discipline that has other disciplines inside until it reaches a discipline without sub-disciplines; in this case the return value will not include any discipline that has sub-disciplines. If False, the method will list up to one level of disciplines contained inside another one; in this case the return value may include disciplines that contain sub-disciplines.

By default it is set to False.

- Returns:

The sub-disciplines.

- Return type:

- is_all_inputs_existing(data_names)¶

Test if several variables are discipline inputs.

- is_all_outputs_existing(data_names)¶

Test if several variables are discipline outputs.

- is_input_existing(data_name)¶

Test if a variable is a discipline input.

- is_output_existing(data_name)¶

Test if a variable is a discipline output.

- linearize(input_data=None, force_all=False, force_no_exec=False)¶

Execute the linearized version of the code.

- Parameters:

input_data (Mapping[str, Any] | None) – The input data needed to linearize the discipline according to the discipline input grammar. If None, use the MDODiscipline.default_inputs.

force_all (bool) –

If False, MDODiscipline._differentiated_inputs and MDODiscipline._differentiated_outputs are used to filter the differentiated variables. Otherwise, all outputs are differentiated wrt all inputs.

By default it is set to False.

force_no_exec (bool) –

If True, the discipline is not re-executed, cache is loaded anyway.

By default it is set to False.

- Returns:

The Jacobian of the discipline.

- Return type:

- notify_status_observers()¶

Notify all status observers that the status has changed.

- Return type:

None

- post_process(post_name, **options)¶

Post-process the optimization history.

- Parameters:

post_name (str) – The name of the post-processor, i.e. the name of a class inheriting from OptPostProcessor.

**options (OptPostProcessorOptionType | Path) – The options for the post-processor.

- Return type:

- print_execution_metrics()¶

Print the total number of executions and cumulated runtime by discipline.

- Return type:

None

- remove_status_observer(obs)¶

Remove an observer for the status.

- Parameters:

obs (Any) – The observer to remove.

- Return type:

None

- reset_statuses_for_run()¶

Set all the statuses to MDODiscipline.STATUS_PENDING.

- Raises:

ValueError – When the discipline cannot be run because of its status.

- Return type:

None

- save_optimization_history(file_path, file_format='hdf5', append=False)¶

Save the optimization history of the scenario to a file.

- Parameters:

- Raises:

ValueError – If the file format is not correct.

- Return type:

None

- serialize(file_path)¶

Serialize the discipline and store it in a file.

- Parameters:

file_path (str | Path) – The path to the file to store the discipline.

- Return type:

None

- set_cache_policy(cache_type='SimpleCache', cache_tolerance=0.0, cache_hdf_file=None, cache_hdf_node_name=None, is_memory_shared=True)¶

Set the type of cache to use and the tolerance level.

This method defines when the output data have to be cached according to the distance between the corresponding input data and the input data already cached for which output data are also cached.

The cache can be either a SimpleCache recording the last execution or a cache storing all executions, e.g. MemoryFullCache and HDF5Cache. Caching data can be either in-memory, e.g. SimpleCache and MemoryFullCache, or on the disk, e.g. HDF5Cache.

The attribute CacheFactory.caches provides the available cache types.

- Parameters:

cache_type (str) –

The type of cache.

By default it is set to “SimpleCache”.

cache_tolerance (float) –

The maximum relative norm of the difference between two input arrays to consider that two input arrays are equal.

By default it is set to 0.0.

cache_hdf_file (str | Path | None) – The path to the HDF file to store the data; this argument is mandatory when the MDODiscipline.HDF5_CACHE policy is used.

cache_hdf_node_name (str | None) – The name of the HDF file node to store the discipline data. If None, MDODiscipline.name is used.

is_memory_shared (bool) –

Whether to store the data with a shared memory dictionary, which makes the cache compatible with multiprocessing.

By default it is set to True.

- Return type:

None

- set_differentiation_method(method='user', step=1e-06, cast_default_inputs_to_complex=False)¶

Set the differentiation method for the process.

When the selected method to differentiate the process is complex_step, the DesignSpace current value will be cast to complex128; additionally, if the option cast_default_inputs_to_complex is True, the default inputs of the scenario’s disciplines will be cast as well, provided that they are ndarray with dtype float64.

- Parameters:

method (str | None) –

The method to use to differentiate the process, either "user", "finite_differences", "complex_step" or "no_derivatives", which is equivalent to None.

By default it is set to “user”.

step (float) –

The finite difference step.

By default it is set to 1e-06.

cast_default_inputs_to_complex (bool) –

Whether to cast all float default inputs of the scenario’s disciplines if the selected method is "complex_step".

By default it is set to False.

- Return type:

None
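The need to cast values to a complex type when "complex_step" is selected follows from how the complex-step approximation works: the derivative is read from the imaginary part of the function evaluated at a complex-perturbed point. The sketch below uses only the standard library; the helper name, the toy function and the step value are assumptions chosen for illustration and are unrelated to the step argument of this method.

```python
import cmath
import math


def complex_step_derivative(f, x, step=1e-20):
    """Approximate f'(x) by Im(f(x + i*step)) / step."""
    # The input must be complex for the perturbation to survive,
    # which is why real-valued inputs need to be cast beforehand.
    return f(complex(x, step)).imag / step


def func(x):
    """Toy analytic function written with complex-safe operations."""
    return cmath.exp(x) * cmath.sin(x)


approx = complex_step_derivative(func, 1.0)
exact = math.exp(1.0) * (math.sin(1.0) + math.cos(1.0))
```

Because no subtraction of nearly equal numbers is involved, the step can be made extremely small without the cancellation error that limits finite differences.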

- set_disciplines_statuses(status)¶

Set the sub-disciplines statuses.

To be implemented in subclasses.

- Parameters:

status (str) – The status.

- Return type:

None

- set_jacobian_approximation(jac_approx_type='finite_differences', jax_approx_step=1e-07, jac_approx_n_processes=1, jac_approx_use_threading=False, jac_approx_wait_time=0)¶

Set the Jacobian approximation method.

Set the linearization mode to jac_approx_type and set the parameters of the approximation for further use when calling MDODiscipline.linearize().

- Parameters:

jac_approx_type (str) –

The approximation method, either “complex_step” or “finite_differences”.

By default it is set to “finite_differences”.

jax_approx_step (float) –

The differentiation step.

By default it is set to 1e-07.

jac_approx_n_processes (int) –

The maximum simultaneous number of threads, if jac_approx_use_threading is True, or processes otherwise, used to parallelize the execution.

By default it is set to 1.

jac_approx_use_threading (bool) –

Whether to use threads instead of processes to parallelize the execution; multiprocessing will copy (serialize) all the disciplines, while threading will share all the memory. This is important to note: if you want to execute the same discipline multiple times, you shall use multiprocessing.

By default it is set to False.

jac_approx_wait_time (float) –

The time waited between two forks of the process / thread.

By default it is set to 0.

- Return type:

None

- set_optimal_fd_step(outputs=None, inputs=None, force_all=False, print_errors=False, numerical_error=2.220446049250313e-16)¶

Compute the optimal finite-difference step.

Compute the optimal step for a forward first-order finite-difference gradient approximation. This requires a first evaluation of the perturbed function values. The optimal step is reached when the truncation error (from cutting the Taylor expansion) and the numerical cancellation error (round-off when computing f(x+step)-f(x)) are approximately equal.

Warning

This calls the discipline execution twice per input variable.

See also

https://en.wikipedia.org/wiki/Numerical_differentiation and “Numerical Algorithms and Digital Representation”, Knut Morken , Chapter 11, “Numerical Differentiation”

- Parameters:

inputs (Iterable[str] | None) – The inputs wrt which the outputs are linearized. If None, use the MDODiscipline._differentiated_inputs.

outputs (Iterable[str] | None) – The outputs to be linearized. If None, use the MDODiscipline._differentiated_outputs.

force_all (bool) –

Whether to consider all the inputs and outputs of the discipline.

By default it is set to False.

print_errors (bool) –

Whether to display the estimated errors.

By default it is set to False.

numerical_error (float) –

The numerical error associated with the calculation of f. By default, this is the machine epsilon (approximately 2.2e-16), but it can be higher when the calculation of f requires a numerical resolution.

By default it is set to 2.220446049250313e-16.

- Returns:

The estimated truncation and cancellation errors.

- Raises:

ValueError – When the Jacobian approximation method has not been set.
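The balance between truncation and cancellation errors can be made concrete with the textbook estimate for a forward difference. This is a sketch under stated assumptions, not necessarily the formula used by the library: the truncation error is taken as |f''(x)| h / 2, the cancellation error as 2 ε |f(x)| / h, and the second derivative is estimated by a central difference with a hypothetical probe_step.

```python
import math

EPSILON = 2.220446049250313e-16  # machine epsilon, the documented default


def optimal_forward_step(f, x, numerical_error=EPSILON, probe_step=1e-4):
    """Estimate the forward finite-difference step balancing both errors.

    Truncation error ~ |f''(x)| * h / 2 and cancellation error
    ~ 2 * numerical_error * |f(x)| / h are equal for
    h = 2 * sqrt(numerical_error * |f(x)| / |f''(x)|).
    """
    f_x = f(x)
    # Second derivative estimated by a central difference (sketch assumption).
    f_second = (f(x + probe_step) - 2 * f_x + f(x - probe_step)) / probe_step**2
    if f_second == 0 or f_x == 0:
        # Degenerate case: fall back to the classical sqrt(eps) rule of thumb.
        return math.sqrt(numerical_error)
    return 2 * math.sqrt(numerical_error * abs(f_x) / abs(f_second))


step = optimal_forward_step(math.exp, 0.0)
derivative = (math.exp(0.0 + step) - math.exp(0.0)) / step
```

For the exponential at 0, where both f and its second derivative equal 1, the estimate reduces to twice the square root of the machine epsilon.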

- set_optimization_history_backup(file_path, each_new_iter=False, each_store=True, erase=False, pre_load=False, generate_opt_plot=False)¶

Set the backup file for the optimization history during the run.

- Parameters:

file_path (str | Path) – The path to the file to save the history.

each_new_iter (bool) –

If True, callback at every iteration.

By default it is set to False.

each_store (bool) –

If True, callback at every call to store() in the database.

By default it is set to True.

erase (bool) –

If True, the backup file is erased before the run.

By default it is set to False.

pre_load (bool) –

If True, the backup file is loaded before run, useful after a crash.

By default it is set to False.

generate_opt_plot (bool) –

If True, generate the optimization history view at backup.

By default it is set to False.

- Raises:

ValueError – If both erase and pre_load are True.

- Return type:

None

- store_local_data(**kwargs)¶

Store discipline data in local data.

- Parameters:

**kwargs (Any) – The data to be stored in MDODiscipline.local_data.

- Return type:

None

- xdsmize(monitor=False, outdir='.', print_statuses=False, outfilename='xdsm.html', latex_output=False, open_browser=False, html_output=True, json_output=False)¶

Create a JSON file defining the XDSM related to the current scenario.

- Parameters:

monitor (bool) –

If True, update the generated file at each discipline status change.

By default it is set to False.

outdir (str | None) –

The directory where the JSON file is generated. If None, the current working directory is used.

By default it is set to “.”.

print_statuses (bool) –

If True, print the statuses in the console at each update.

By default it is set to False.

outfilename (str) –

The name of the file of the output. The basename is used and the extension is adapted for the HTML / JSON / PDF outputs.

By default it is set to “xdsm.html”.

latex_output (bool) –

If True, build TEX, TIKZ and PDF files.

By default it is set to False.

open_browser (bool) –

If True, open the web browser and display the XDSM.

By default it is set to False.

html_output (bool) –

If True, output a self-contained HTML file.

By default it is set to True.

json_output (bool) –

If True, output a JSON file for XDSMjs.

By default it is set to False.

- Return type:

None

- ALGO = 'algo'¶

- ALGO_OPTIONS = 'algo_options'¶

- APPROX_MODES = ['finite_differences', 'complex_step']¶

- AVAILABLE_MODES = ('auto', 'direct', 'adjoint', 'reverse', 'finite_differences', 'complex_step')¶

- AVAILABLE_STATUSES = ['DONE', 'FAILED', 'PENDING', 'RUNNING', 'VIRTUAL', 'LINEARIZE']¶

- COMPLEX_STEP = 'complex_step'¶

- EVAL_JAC = 'eval_jac'¶

- FINITE_DIFFERENCES = 'finite_differences'¶

- GRAMMAR_DIRECTORY: ClassVar[str | None] = None¶

The directory in which to search for the grammar files if not the class one.

- HDF5_CACHE = 'HDF5Cache'¶

- JSON_GRAMMAR_TYPE = 'JSONGrammar'¶

- L_BOUNDS = 'l_bounds'¶

- MEMORY_FULL_CACHE = 'MemoryFullCache'¶

- N_CPUS = 2¶

- N_SAMPLES = 'n_samples'¶

- RE_EXECUTE_DONE_POLICY = 'RE_EXEC_DONE'¶

- RE_EXECUTE_NEVER_POLICY = 'RE_EXEC_NEVER'¶

- SEED = 'seed'¶

- SIMPLE_CACHE = 'SimpleCache'¶

- SIMPLE_GRAMMAR_TYPE = 'SimpleGrammar'¶

- STATUS_DONE = 'DONE'¶

- STATUS_FAILED = 'FAILED'¶

- STATUS_LINEARIZE = 'LINEARIZE'¶

- STATUS_PENDING = 'PENDING'¶

- STATUS_RUNNING = 'RUNNING'¶

- STATUS_VIRTUAL = 'VIRTUAL'¶

- U_BOUNDS = 'u_bounds'¶

- X_0 = 'x_0'¶

- activate_counters: ClassVar[bool] = True¶

Whether to activate the counters (execution time, calls and linearizations).

- activate_input_data_check: ClassVar[bool] = True¶

Whether to check that the input data respect the input grammar.

- activate_output_data_check: ClassVar[bool] = True¶

Whether to check that the output data respect the output grammar.

- cache: AbstractCache | None¶

The cache containing one or several executions of the discipline according to the cache policy.

- property cache_tol: float¶

The cache input tolerance.

This is the tolerance for equality of the inputs in the cache. If norm(stored_input_data-input_data) <= cache_tol * norm(stored_input_data), the cached data for stored_input_data is returned when calling self.execute(input_data).

- Raises:

ValueError – When the discipline does not have a cache.
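The tolerance test stated above can be checked on small vectors. The helpers below are illustrative only; they assume a Euclidean norm and flat lists of floats, whereas the library compares its own array data.

```python
import math


def norm(values):
    """Euclidean norm of a sequence of floats."""
    return math.sqrt(sum(value**2 for value in values))


def is_cache_hit(stored_input_data, input_data, cache_tol=0.0):
    """Apply norm(stored - new) <= cache_tol * norm(stored)."""
    difference = [s - n for s, n in zip(stored_input_data, input_data)]
    return norm(difference) <= cache_tol * norm(stored_input_data)


stored = [1.0, 2.0]
exact_hit = is_cache_hit(stored, [1.0, 2.0])  # zero tolerance, identical inputs
near_miss = is_cache_hit(stored, [1.0, 2.001])  # zero tolerance, different inputs
near_hit = is_cache_hit(stored, [1.0, 2.001], cache_tol=1e-3)  # relative tolerance
```

With the default tolerance of 0.0 only identical inputs match; a relative tolerance of 1e-3 accepts the slightly perturbed input.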

- data_processor: DataProcessor¶

A tool to pre- and post-process discipline data.

- property default_inputs: dict[str, Any]¶

The default inputs.

- Raises:

TypeError – When the default inputs are not passed as a dictionary.

- property design_space: DesignSpace¶

The design space on which the scenario is performed.

- property disciplines: list[gemseo.core.discipline.MDODiscipline]¶

The sub-disciplines, if any.

- property exec_time: float | None¶

The cumulated execution time of the discipline.

This property is multiprocessing safe.

- Raises:

RuntimeError – When the discipline counters are disabled.

- formulation: MDOFormulation¶

The MDO formulation.

- input_grammar: BaseGrammar¶

The input grammar.

- jac: dict[str, dict[str, ndarray]]¶

The Jacobians of the outputs wrt inputs, of the form {output: {input: matrix}}.

- property linearization_mode: str¶

The linearization mode among MDODiscipline.AVAILABLE_MODES.

- Raises:

ValueError – When the linearization mode is unknown.

- property local_data: DisciplineData¶

The current input and output data.

- property n_calls: int | None¶

The number of times the discipline was executed.

This property is multiprocessing safe.

- Raises:

RuntimeError – When the discipline counters are disabled.

- property n_calls_linearize: int | None¶

The number of times the discipline was linearized.

This property is multiprocessing safe.

- Raises:

RuntimeError – When the discipline counters are disabled.

- optimization_result: OptimizationResult¶

The optimization result.

- output_grammar: BaseGrammar¶

The output grammar.

- property post_factory: PostFactory | None¶

The factory of post-processors.

- residual_variables: Mapping[str, str]¶

The output variables mapping to their inputs, to be considered as residuals; they shall be equal to zero.

- seed: int¶

The seed used by the random number generators for reproducibility.

This seed is initialized at 0 and each call to execute() increments it before using it.
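The increment-then-use rule for the seed can be mimicked with the standard random module. SeededSampler is a hypothetical class written for this sketch; it only illustrates why successive executions draw different samples while a fresh object reproduces the same sequence.

```python
import random


class SeededSampler:
    """Hypothetical sampler reproducing the documented seed handling."""

    def __init__(self):
        self.seed = 0  # initial value stated in the documentation

    def execute(self, n_samples):
        # Increment the seed first, then use it for this execution.
        self.seed += 1
        generator = random.Random(self.seed)
        return [generator.random() for _ in range(n_samples)]


sampler = SeededSampler()
first_samples = sampler.execute(3)  # drawn with seed 1
second_samples = sampler.execute(3)  # drawn with seed 2

# A fresh object replays the same sequence of seeds, hence the same samples.
replay = SeededSampler().execute(3)
```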

- property status: str¶

The status of the discipline.

The status is meant to monitor the process and to give the user a simplified view of the state of the disciplines (execution, linearization or done). The core part of the execution is _run; the core part of linearize is _compute_jacobian or the approximate Jacobian computation.

- time_stamps = None¶

- property use_standardized_objective: bool¶

Whether to use the standardized objective for logging and post-processing.

The objective is

OptimizationProblem.objective.

Examples using DOEScenario¶

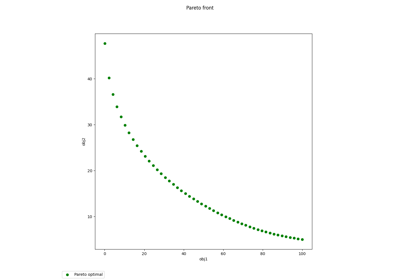

Pareto front on Binh and Korn problem using a BiLevel formulation

Simple disciplinary DOE example on the Sobieski SSBJ test case