
Machine learning algorithm selection example

In this example, we use the MLAlgoSelection class to perform a grid search over different algorithms and their hyperparameter values, and to select the best-performing model.
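The general idea of a grid search over several algorithms can be sketched in plain Python. This is an illustrative sketch, not GEMSEO's implementation. The helpers `expand_grid` and `grid_search`, the `score` callback and the toy candidates are hypothetical. MLAlgoSelection also trains each model and estimates its quality with a chosen measure, which the `score` callback stands in for here.

```python
from itertools import product


def expand_grid(grid):
    """Expand {name: [values, ...]} into one dict per combination of values."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*grid.values())]


def grid_search(candidates, score):
    """Return (name, params, score) with the lowest score over all grids.

    `candidates` maps a (hypothetical) algorithm name to its grid, and
    `score(name, params)` is a user-supplied error to minimize.
    """
    best = None
    for name, grid in candidates.items():
        for params in expand_grid(grid):
            value = score(name, params)
            if best is None or value < best[2]:
                best = (name, params, value)
    return best


# Hypothetical toy candidates and score, for illustration only.
toy_candidates = {
    "A": {"degree": [1, 2, 3]},
    "B": {"alpha": [0.1, 1.0], "fit_intercept": [True, False]},
}


def toy_score(name, params):
    # Pretend degree 2 is ideal for "A" and "B" is always worse.
    if name == "A":
        return abs(params["degree"] - 2)
    return 1.0 + params["alpha"]


best = grid_search(toy_candidates, toy_score)
```

Each grid is expanded as a Cartesian product, so a grid with value lists of lengths 5, 3 and 1 yields 15 combinations to evaluate.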

```python
from __future__ import annotations

import matplotlib.pyplot as plt
from numpy import linspace
from numpy import sort
from numpy.random import default_rng

from gemseo.algos.design_space import DesignSpace
from gemseo.datasets.io_dataset import IODataset
from gemseo.mlearning.core.selection import MLAlgoSelection
from gemseo.mlearning.quality_measures.mse_measure import MSEMeasure

rng = default_rng(54321)
```

Build dataset

The data are generated from the function \(f(x)=x^2\). The inputs \(\{x_i\}_{i=1,\ldots,20}\) are drawn uniformly at random from the interval \([0,1]\) and then sorted. Each output \(y_i = f(x_i) + \varepsilon_i\) is the value of \(f\) at \(x_i\) corrupted by Gaussian noise \(\varepsilon_i\) with zero mean and standard deviation \(\sigma=0.05\).
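The same sampling scheme can be sketched with only the Python standard library. This is an assumption-laden stand-in for the NumPy code: `make_noisy_square_data` is a hypothetical helper, and `random.Random` produces a different stream than NumPy's `default_rng`, so the seed 54321 does not reproduce the exact values used in this example.

```python
import random


def make_noisy_square_data(n=20, sigma=0.05, seed=54321):
    """Sample y = x**2 + noise at n sorted inputs drawn uniformly in [0, 1)."""
    rng = random.Random(seed)  # stdlib generator, not NumPy's default_rng
    x = sorted(rng.random() for _ in range(n))
    # Add zero-mean Gaussian noise with standard deviation sigma to f(x) = x**2.
    y = [xi**2 + rng.gauss(0.0, sigma) for xi in x]
    return x, y


x, y = make_noisy_square_data()
```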

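```python
n = 20
# Sorted inputs drawn uniformly in [0, 1).
x = sort(rng.random(n))
# Noisy outputs: x**2 plus Gaussian noise with standard deviation 0.05.
y = x**2 + rng.normal(0, 0.05, n)

dataset = IODataset()
# x[:, None] reshapes each 1D array into a column of shape (n, 1).
dataset.add_variable("x", x[:, None], dataset.INPUT_GROUP)
dataset.add_variable("y", y[:, None], dataset.OUTPUT_GROUP)
```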

Build selector

We consider three regression models (linear, polynomial and radial basis function), each with a grid of candidate hyperparameter values. The models are compared using the mean squared error (MSE), estimated by k-fold cross-validation with 5 folds.
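To make the quality measure concrete, here is a minimal sketch of k-fold cross-validation with the MSE, in plain Python and for a one-dimensional least-squares line. The helpers `fit_line` and `kfold_mse` are hypothetical. Folds are assigned by interleaving indices (`i % n_folds`), which is one possible choice; GEMSEO may assign or shuffle folds differently. Each fold is held out once, the model is trained on the other folds, and the test MSEs are averaged.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x; returns a predictor."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((xi - mx) ** 2 for xi in xs)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda t: intercept + slope * t


def kfold_mse(xs, ys, fit, n_folds=5):
    """Average the test MSE over n_folds interleaved folds."""
    n = len(xs)
    fold_errors = []
    for k in range(n_folds):
        test = [i for i in range(n) if i % n_folds == k]
        train = [i for i in range(n) if i % n_folds != k]
        # Train on the other folds, then measure the squared error on fold k.
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        squared = [(model(xs[i]) - ys[i]) ** 2 for i in test]
        fold_errors.append(sum(squared) / len(squared))
    return sum(fold_errors) / n_folds


xs = [i / 19 for i in range(20)]
linear_error = kfold_mse(xs, [1 + 2 * t for t in xs], fit_line)
quadratic_error = kfold_mse(xs, [t**2 for t in xs], fit_line)
```

With 20 samples and 5 folds, each model is trained on 16 points and tested on 4. A straight line reproduces linear data almost exactly, whereas it leaves a positive error on data from \(f(x)=x^2\).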

```python
selector = MLAlgoSelection(
    dataset, MSEMeasure, measure_evaluation_method_name="KFOLDS", n_folds=5
)
```

```python
# Linear regression: 5 penalty levels x 3 L2 ratios x 1 = 15 combinations.
selector.add_candidate(
    "LinearRegressor",
    penalty_level=[0, 0.1, 1, 10, 20],
    l2_penalty_ratio=[0, 0.5, 1],
    fit_intercept=[True],
)
```
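The `penalty_level` and `l2_penalty_ratio` hyperparameters suggest an elastic-net style regularization. The sketch below assumes, without confirmation from this example, that `penalty_level` is the overall weight \(\alpha\) and `l2_penalty_ratio` is the share \(\rho\) of the L2 part, following scikit-learn's ElasticNet convention with `l1_ratio = 1 - rho`. The helper `elastic_net_penalty` is hypothetical. It computes the penalty that would be added to the least-squares loss for coefficients `coefs`.

```python
def elastic_net_penalty(coefs, penalty_level, l2_penalty_ratio):
    """Assumed penalty: alpha * ((1 - rho) * ||w||_1 + rho / 2 * ||w||_2^2)."""
    alpha, rho = penalty_level, l2_penalty_ratio
    l1 = sum(abs(w) for w in coefs)
    l2_squared = sum(w * w for w in coefs)
    return alpha * ((1.0 - rho) * l1 + 0.5 * rho * l2_squared)


w = [1.0, -2.0]
lasso_like = elastic_net_penalty(w, 10, 0)   # rho = 0: pure L1 penalty
ridge_like = elastic_net_penalty(w, 10, 1)   # rho = 1: pure L2 penalty
no_penalty = elastic_net_penalty(w, 0, 0.5)  # alpha = 0: ordinary least squares
```

Under this assumption, `penalty_level=0` removes regularization entirely, so the three `l2_penalty_ratio` values collapse to the same unpenalized model.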

```python
# Polynomial regression: 4 degrees x 4 penalty levels x 1 x 2 = 32 combinations.
selector.add_candidate(
    "PolynomialRegressor",
    degree=[2, 3, 4, 10],
    penalty_level=[0, 0.1, 1, 10],
    l2_penalty_ratio=[1],
    fit_intercept=[True, False],
)
```
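For a scalar input, polynomial regression is linear regression on the monomials \(x, x^2, \ldots, x^d\), optionally with a constant term. The helper below is hypothetical and shows the textbook basis; this example does not show how GEMSEO builds it internally. It also shows how the number of coefficients grows with `degree` and `fit_intercept`.

```python
def polynomial_features(x, degree, fit_intercept=True):
    """Monomials of a scalar input: [1,] x, x**2, ..., x**degree."""
    # Power 0 gives the constant column used for the intercept.
    powers = range(0 if fit_intercept else 1, degree + 1)
    return [x**p for p in powers]


row = polynomial_features(2.0, 3)
row_no_intercept = polynomial_features(2.0, 3, fit_intercept=False)
n_coefficients_degree_10 = len(polynomial_features(0.5, 10))
```

With 5-fold cross-validation on 20 samples, each training set has 16 points. A degree-10 polynomial with an intercept then has 11 coefficients to fit from 16 noisy points, which makes overfitting likely and is the kind of candidate the MSE comparison is meant to penalize.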

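```python
# Calibration space for the RBF shape parameter epsilon:
# one float variable with bounds [0.01, 0.1] and initial value 0.05.
rbf_space = DesignSpace()
rbf_space.add_variable("epsilon", 1, "float", 0.01, 0.1, 0.05)

# epsilon is calibrated over rbf_space with a 16-sample full-factorial design,
# while smooth is searched over a grid of 6 values.
selector.add_candidate(
    "RBFRegressor",
    calib_space=rbf_space,
    calib_algo={"algo": "fullfact", "n_samples": 16},
    smooth=[0, 0.01, 0.1, 1, 10, 100],
)
```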
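With a single calibration variable, a full-factorial design of 16 samples should reduce to 16 equally spaced values of epsilon between its bounds 0.01 and 0.1. This is an assumption about how the levels are placed, based on the usual full-factorial construction. The sketch also writes out the multiquadric basis function reported in the output for the RBF model, using the form documented for SciPy's `Rbf`: \(\varphi(r) = \sqrt{(r/\varepsilon)^2 + 1}\). Both helper names are hypothetical.

```python
import math


def fullfact_levels_1d(lower, upper, n_samples):
    """Equally spaced levels of one variable, both bounds included."""
    step = (upper - lower) / (n_samples - 1)
    return [lower + i * step for i in range(n_samples)]


def multiquadric(r, epsilon):
    """Multiquadric radial basis function, in the form used by SciPy's Rbf."""
    return math.sqrt((r / epsilon) ** 2 + 1.0)


epsilon_levels = fullfact_levels_1d(0.01, 0.1, 16)  # step of 0.006
```

Because \(\varepsilon\) divides the distance \(r\), larger values give a flatter basis function, so calibrating it trades smoothness of the interpolant against its ability to follow local variations.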

Select best candidate

```python
best_algo = selector.select()
best_algo
```

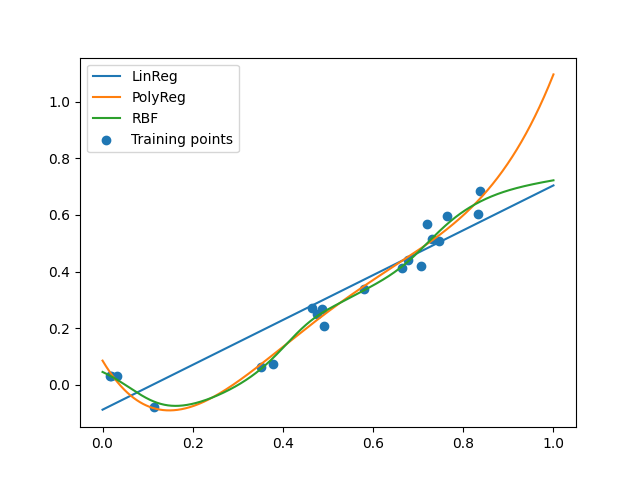
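Since a lower MSE is better, the final selection amounts to taking the minimum over the best model of each candidate family. The sketch below assumes that each entry of `selector.candidates` is a pair whose first element is the trained model and whose second element is its estimated quality. The `candidate[0]` access in the plotting code suggests this layout, but it is not confirmed here. The helper `pick_best` and the pairs are hypothetical placeholders, not results of this example.

```python
def pick_best(candidates):
    """Return the (model, quality) pair with the smallest quality value."""
    return min(candidates, key=lambda pair: pair[1])


# Hypothetical placeholder pairs, not results from this example.
placeholder = [("model_a", 3.0), ("model_b", 1.0), ("model_c", 2.0)]
best_model, best_quality = pick_best(placeholder)
```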

Plot results

Plot the best model found for each candidate algorithm, together with the training points.

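```python
# Evaluate each model on a fine grid of 1000 points in [0, 1].
finex = linspace(0, 1, 1000)
for candidate in selector.candidates:
    # The first element of each candidate is its best trained model.
    algo = candidate[0]
    print(algo)
    # predict expects a 2D array (n_samples, n_inputs); keep the single output.
    predy = algo.predict(finex[:, None])[:, 0]
    plt.plot(finex, predy, label=algo.SHORT_ALGO_NAME)

plt.scatter(x, y, label="Training points")
plt.legend()
plt.show()
```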

```text
LinearRegressor(fit_intercept=True, l2_penalty_ratio=0, penalty_level=0, random_state=0)
based on the scikit-learn library
built from 20 learning samples

PolynomialRegressor(degree=4, fit_intercept=True, l2_penalty_ratio=1, penalty_level=0, random_state=0)
based on the scikit-learn library
built from 20 learning samples

RBFRegressor(epsilon=0.1, function=multiquadric, norm=euclidean, smooth=0.1)
based on the SciPy library
built from 20 learning samples
```
