Quadratic approximations

In this example, we illustrate the use of the QuadApprox plot on Sobieski’s SSBJ problem.

from __future__ import annotations

from gemseo import configure_logger

from gemseo import create_discipline

from gemseo import create_scenario

from gemseo.problems.sobieski.core.design_space import SobieskiDesignSpace

Import

The first step is to import some high-level functions and the class defining the design space.

configure_logger()

<RootLogger root (INFO)>

Description

The QuadApprox post-processing performs a quadratic approximation of a given function from an optimization history and plots the results as cuts of the approximation.

Create disciplines

Then, we instantiate the disciplines of Sobieski’s SSBJ problem: Propulsion, Aerodynamics, Structure and Mission.

disciplines = create_discipline([
    "SobieskiPropulsion",
    "SobieskiAerodynamics",
    "SobieskiStructure",
    "SobieskiMission",
])

Create design space

We also create the SobieskiDesignSpace.

design_space = SobieskiDesignSpace()

Create and execute scenario

The next step is to build an MDO scenario in order to maximize the range, encoded ‘y_4’, with respect to the design parameters, while satisfying the inequality constraints ‘g_1’, ‘g_2’ and ‘g_3’. We use the MDF formulation, the SLSQP optimization algorithm and a maximum number of iterations equal to 10.

scenario = create_scenario(
    disciplines,
    "MDF",
    "y_4",
    design_space,
    maximize_objective=True,
)

scenario.set_differentiation_method()

for constraint in ["g_1", "g_2", "g_3"]:
    scenario.add_constraint(constraint, constraint_type="ineq")

scenario.execute({"algo": "SLSQP", "max_iter": 10})

INFO - 13:57:21:

INFO - 13:57:21: *** Start MDOScenario execution ***

INFO - 13:57:21: MDOScenario

INFO - 13:57:21: Disciplines: SobieskiAerodynamics SobieskiMission SobieskiPropulsion SobieskiStructure

INFO - 13:57:21: MDO formulation: MDF

INFO - 13:57:22: Optimization problem:

INFO - 13:57:22: minimize -y_4(x_shared, x_1, x_2, x_3)

INFO - 13:57:22: with respect to x_1, x_2, x_3, x_shared

INFO - 13:57:22: subject to constraints:

INFO - 13:57:22: g_1(x_shared, x_1, x_2, x_3) <= 0.0

INFO - 13:57:22: g_2(x_shared, x_1, x_2, x_3) <= 0.0

INFO - 13:57:22: g_3(x_shared, x_1, x_2, x_3) <= 0.0

INFO - 13:57:22: over the design space:

INFO - 13:57:22: +-------------+-------------+-------+-------------+-------+

INFO - 13:57:22: | Name | Lower bound | Value | Upper bound | Type |

INFO - 13:57:22: +-------------+-------------+-------+-------------+-------+

INFO - 13:57:22: | x_shared[0] | 0.01 | 0.05 | 0.09 | float |

INFO - 13:57:22: | x_shared[1] | 30000 | 45000 | 60000 | float |

INFO - 13:57:22: | x_shared[2] | 1.4 | 1.6 | 1.8 | float |

INFO - 13:57:22: | x_shared[3] | 2.5 | 5.5 | 8.5 | float |

INFO - 13:57:22: | x_shared[4] | 40 | 55 | 70 | float |

INFO - 13:57:22: | x_shared[5] | 500 | 1000 | 1500 | float |

INFO - 13:57:22: | x_1[0] | 0.1 | 0.25 | 0.4 | float |

INFO - 13:57:22: | x_1[1] | 0.75 | 1 | 1.25 | float |

INFO - 13:57:22: | x_2 | 0.75 | 1 | 1.25 | float |

INFO - 13:57:22: | x_3 | 0.1 | 0.5 | 1 | float |

INFO - 13:57:22: +-------------+-------------+-------+-------------+-------+

INFO - 13:57:22: Solving optimization problem with algorithm SLSQP:

INFO - 13:57:22: 10%|█ | 1/10 [00:00<00:00, 10.63 it/sec, obj=-536]

INFO - 13:57:22: 20%|██ | 2/10 [00:00<00:01, 7.34 it/sec, obj=-2.12e+3]

WARNING - 13:57:22: MDAJacobi has reached its maximum number of iterations but the normed residual 1.7130677857005655e-05 is still above the tolerance 1e-06.

INFO - 13:57:22: 30%|███ | 3/10 [00:00<00:01, 6.20 it/sec, obj=-3.75e+3]

INFO - 13:57:22: 40%|████ | 4/10 [00:00<00:01, 5.92 it/sec, obj=-3.96e+3]

INFO - 13:57:22: 50%|█████ | 5/10 [00:00<00:00, 5.77 it/sec, obj=-3.96e+3]

INFO - 13:57:22: Optimization result:

INFO - 13:57:22: Optimizer info:

INFO - 13:57:22: Status: 8

INFO - 13:57:22: Message: Positive directional derivative for linesearch

INFO - 13:57:22: Number of calls to the objective function by the optimizer: 6

INFO - 13:57:22: Solution:

INFO - 13:57:22: The solution is feasible.

INFO - 13:57:22: Objective: -3963.408265187933

INFO - 13:57:22: Standardized constraints:

INFO - 13:57:22: g_1 = [-0.01806104 -0.03334642 -0.04424946 -0.0518346 -0.05732607 -0.13720865

INFO - 13:57:22: -0.10279135]

INFO - 13:57:22: g_2 = 3.333278582928756e-06

INFO - 13:57:22: g_3 = [-7.67181773e-01 -2.32818227e-01 8.30379541e-07 -1.83255000e-01]

INFO - 13:57:22: Design space:

INFO - 13:57:22: +-------------+-------------+---------------------+-------------+-------+

INFO - 13:57:22: | Name | Lower bound | Value | Upper bound | Type |

INFO - 13:57:22: +-------------+-------------+---------------------+-------------+-------+

INFO - 13:57:22: | x_shared[0] | 0.01 | 0.06000083331964572 | 0.09 | float |

INFO - 13:57:22: | x_shared[1] | 30000 | 60000 | 60000 | float |

INFO - 13:57:22: | x_shared[2] | 1.4 | 1.4 | 1.8 | float |

INFO - 13:57:22: | x_shared[3] | 2.5 | 2.5 | 8.5 | float |

INFO - 13:57:22: | x_shared[4] | 40 | 70 | 70 | float |

INFO - 13:57:22: | x_shared[5] | 500 | 1500 | 1500 | float |

INFO - 13:57:22: | x_1[0] | 0.1 | 0.4 | 0.4 | float |

INFO - 13:57:22: | x_1[1] | 0.75 | 0.75 | 1.25 | float |

INFO - 13:57:22: | x_2 | 0.75 | 0.75 | 1.25 | float |

INFO - 13:57:22: | x_3 | 0.1 | 0.1562448753887276 | 1 | float |

INFO - 13:57:22: +-------------+-------------+---------------------+-------------+-------+

INFO - 13:57:22: *** End MDOScenario execution (time: 0:00:00.991414) ***

{'max_iter': 10, 'algo': 'SLSQP'}

Post-process scenario

Lastly, we post-process the scenario by means of the QuadApprox plot, which performs a quadratic approximation of a given function from an optimization history and plots the results as cuts of the approximation.

Tip

Each post-processing method requires different inputs and offers a variety of customization options. Use the high-level function get_post_processing_options_schema() to print a table with the options for any post-processing algorithm. Or refer to our dedicated page: Post-processing algorithms.

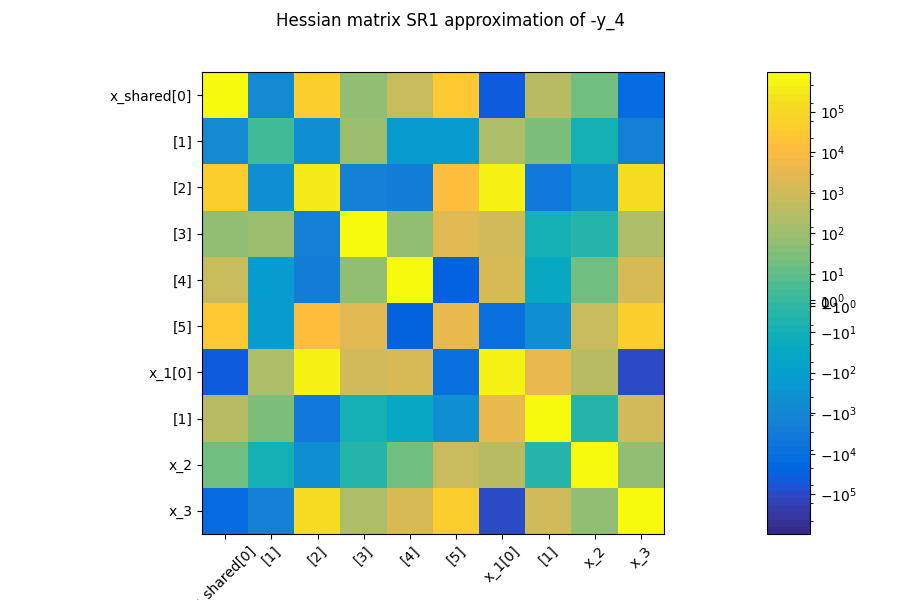

The first plot shows an approximation of the Hessian matrix \(\frac{\partial^2 f}{\partial x_i \partial x_j}\) based on the Symmetric Rank 1 method (SR1) [NW06]. The color map uses a symmetric logarithmic (symlog) scale. This plot shows the cross influence of the design variables on the objective function or constraints. For instance, in this figure, the maximal second-order sensitivity is \(\frac{\partial^2 (-y_4)}{\partial x_0^2} = 2 \times 10^5\), which means that \(x_0\) is the most influential variable. The cross derivative \(\frac{\partial^2 (-y_4)}{\partial x_0 \partial x_2} = 5 \times 10^4\) is positive and relatively large compared with the former, so the combined effects of \(x_0\) and \(x_2\) are non-negligible in comparison.
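To make the SR1 idea concrete, here is a minimal, dependency-free sketch of the update as it is commonly stated in the optimization literature: given a step \(s = x_{k+1} - x_k\) and a gradient change \(y = \nabla f(x_{k+1}) - \nabla f(x_k)\), the estimate \(B\) is corrected by the rank-one term \(\frac{r r^T}{r^T s}\) built from the residual \(r = y - Bs\). The function names, the identity initialization and the skipping tolerance are assumptions made for illustration; this is not GEMSEO's implementation, whose details may differ.

```python
def sr1_update(b_mat, step, grad_diff, tol=1e-8):
    """Return the SR1-updated Hessian estimate as a new nested list."""
    n = len(step)
    # Residual r = y - B s: how far the current estimate is from
    # satisfying the secant condition B s = y.
    resid = [
        grad_diff[i] - sum(b_mat[i][j] * step[j] for j in range(n))
        for i in range(n)
    ]
    denom = sum(r * s for r, s in zip(resid, step))
    norm_r = sum(r * r for r in resid) ** 0.5
    norm_s = sum(s * s for s in step) ** 0.5
    # Usual safeguard (assumed tolerance): skip the update when the
    # denominator r^T s is too small to be trusted.
    if abs(denom) <= tol * norm_r * norm_s:
        return [row[:] for row in b_mat]
    return [
        [b_mat[i][j] + resid[i] * resid[j] / denom for j in range(n)]
        for i in range(n)
    ]


def sr1_hessian(points, gradients):
    """Accumulate SR1 updates over a history of points and gradients."""
    n = len(points[0])
    # Assumed starting estimate: the identity matrix.
    b_mat = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for k in range(len(points) - 1):
        step = [a - b for a, b in zip(points[k + 1], points[k])]
        grad_diff = [a - b for a, b in zip(gradients[k + 1], gradients[k])]
        b_mat = sr1_update(b_mat, step, grad_diff)
    return b_mat


# Toy history on f(x) = 0.5 x^T A x, whose gradient is A x.
# These values are illustrative and unrelated to the SSBJ scenario.
a_mat = [[2.0, 1.0], [1.0, 3.0]]
points = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]]
gradients = [[sum(a * x for a, x in zip(row, p)) for row in a_mat] for p in points]
hessian = sr1_hessian(points, gradients)
print(hessian)  # [[2.0, 1.0], [1.0, 3.0]]
```

On this toy quadratic, whose Hessian is constant, two independent steps are enough for the sketch to recover the matrix exactly. On a real optimization history the result is only an approximation, and its quality depends on how well the iterates explore the design space.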

scenario.post_process("QuadApprox", function="-y_4", save=False, show=True)

<gemseo.post.quad_approx.QuadApprox object at 0x7f2d0ea2eb20>

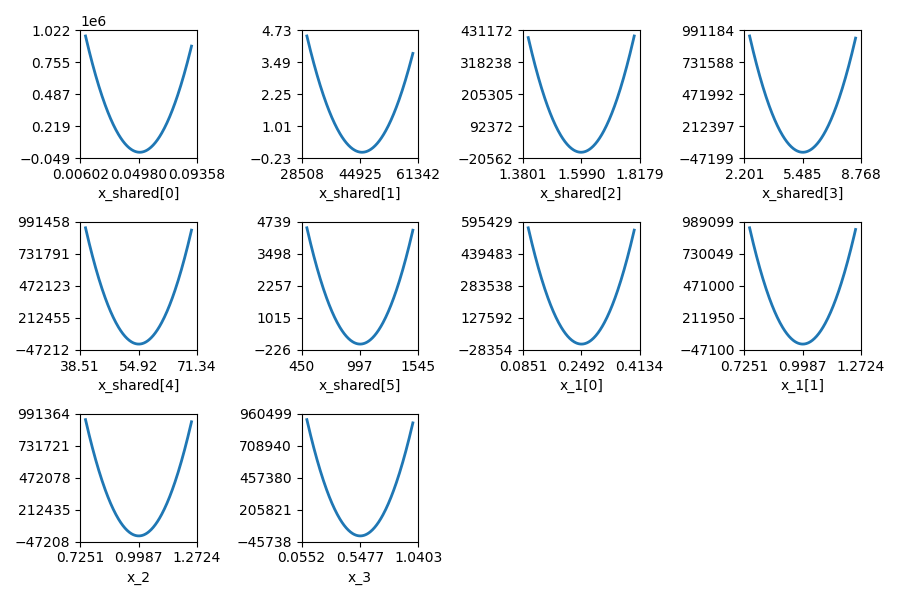

The second plot represents the quadratic approximation of the objective around the optimal solution: \(a_{i}(t)=0.5 (t-x^*_i)^2 \frac{\partial^2 f}{\partial x_i^2} + (t-x^*_i) \frac{\partial f}{\partial x_i} + f(x^*)\), where \(x^*\) is the optimal solution and the derivatives are evaluated at \(x^*\). This approximation highlights the sensitivity of the objective function with respect to the design variables: the design variables \(x_1, x_5, x_6\) have little influence, whereas \(x_0, x_2, x_9\) have a strong influence on the objective. This trend is also visible in the diagonal terms of the Hessian matrix \(\frac{\partial^2 f}{\partial x_i^2}\).
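The cut formula can be evaluated directly. The sketch below is a plain Python transcription of \(a_i(t)\); the function names and the numerical values in the usage lines are hypothetical and do not come from the scenario.

```python
def quadratic_cut(t, x_opt_i, f_opt, grad_i, hess_ii):
    """Evaluate a_i(t) = 0.5 (t - x*_i)^2 H_ii + (t - x*_i) g_i + f(x*)."""
    delta = t - x_opt_i
    return 0.5 * hess_ii * delta**2 + grad_i * delta + f_opt


def sample_cut(lower, upper, n_points, x_opt_i, f_opt, grad_i, hess_ii):
    """Sample the cut at n_points evenly spaced values of t in [lower, upper]."""
    if n_points < 2:
        raise ValueError("n_points must be at least 2")
    width = (upper - lower) / (n_points - 1)
    values = [lower + k * width for k in range(n_points)]
    return [
        (t, quadratic_cut(t, x_opt_i, f_opt, grad_i, hess_ii)) for t in values
    ]


# Hypothetical one-variable data: optimum at t = 1 with f(x*) = 2,
# zero first derivative and a second derivative equal to 4.
cut = sample_cut(0.0, 2.0, 5, x_opt_i=1.0, f_opt=2.0, grad_i=0.0, hess_ii=4.0)
for t, value in cut:
    print(t, value)
```

A diagonal Hessian term with a large magnitude gives a sharply curved parabola, which is how an influential variable shows up in the plot; a small term gives a nearly flat cut.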
