Note

Go to the end to download the full example code

Mixture of experts with PCA on Burgers dataset¶

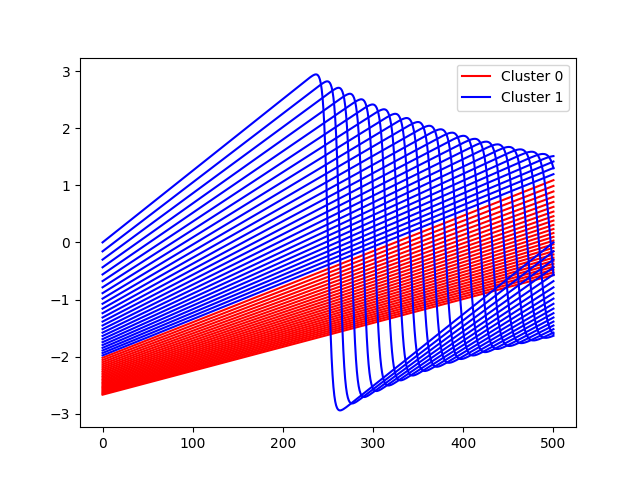

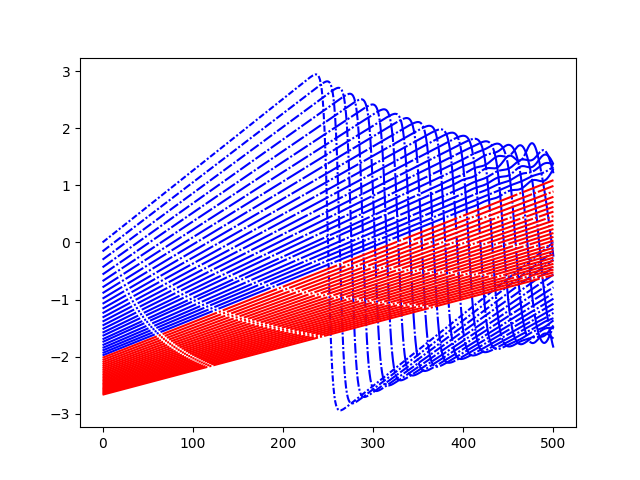

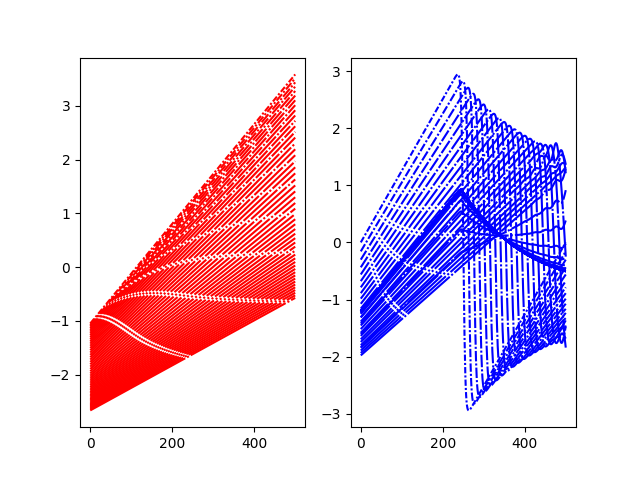

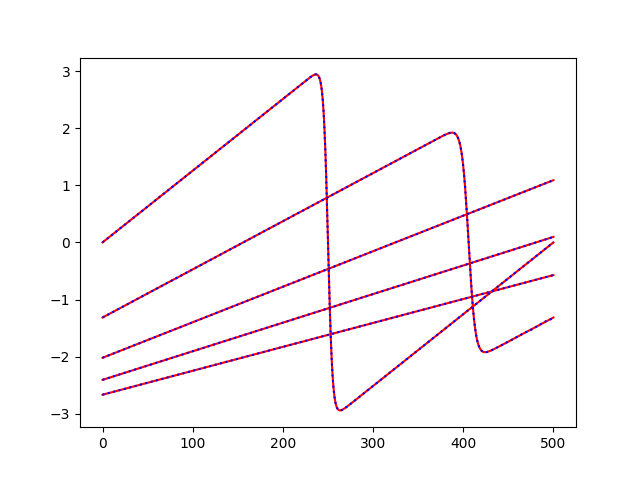

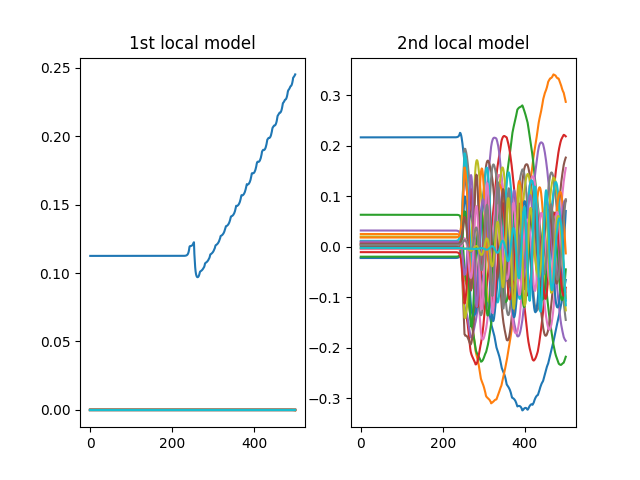

In this demo, we apply a mixture of experts regression model to the Burgers dataset. In order to reduce the output dimension, we apply a PCA to the outputs.

Imports¶

Import from standard libraries and GEMSEO.

from __future__ import annotations

import matplotlib.pyplot as plt

from matplotlib.lines import Line2D

from numpy import nonzero

from gemseo import configure_logger

from gemseo import create_benchmark_dataset

from gemseo.mlearning import create_regression_model

configure_logger()

<RootLogger root (INFO)>

Load dataset (Burgers)¶

n_samples = 50

dataset = create_benchmark_dataset("BurgersDataset", n_samples=n_samples)

inputs = dataset.input_dataset.to_numpy()

outputs = dataset.output_dataset.to_numpy()

Mixture of experts (MoE)¶

In this section we load a mixture of experts regression model through the machine learning API, using clustering, classification and regression models.

Mixture of experts model¶

We construct the MoE model using the predefined parameters, and fit the model to the dataset through the learn() method.

model = create_regression_model("MOERegressor", dataset)

model.set_clusterer("KMeans", n_clusters=2, transformer={"outputs": "JamesonSensor"})

model.set_classifier("KNNClassifier", n_neighbors=3)

model.set_regressor(

"GaussianProcessRegressor", transformer={"outputs": ("PCA", {"n_components": 20})}

)

model.learn()

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value

/home/docs/checkouts/readthedocs.org/user_builds/gemseo/envs/stable/lib/python3.9/site-packages/gemseo/datasets/dataset.py:490: PerformanceWarning: DataFrame is highly fragmented. This is usually the result of calling `frame.insert` many times, which has poor performance. Consider joining all columns at once using pd.concat(axis=1) instead. To get a de-fragmented frame, use `newframe = frame.copy()`

self[columns] = value